helpfulFuchsiaAmphibian / main.tsx (9 matches)

```tsx
function App() {
  const [generatedImage, setGeneratedImage] = useState("");
  const [audioDescription, setAudioDescription] = useState("AI continues to revolutionize audio generation with innovative technologies in music composition, voice synthesis, and sound design.");
  const [isRolling, setIsRolling] = useState(false);
  // …
      setAudioDescription(audioDescCompletion.choices[0].message.content || "AI continues to revolutionize audio generation with innovative technologies in music composition, voice synthesis, and sound design.");

      // Fetch AI-generated image
      const imageUrl = "https://maxm-imggenurl.web.val.run/AI technology visualization with abstract digital elements representing machine learning and neural networks";
      const response = await fetch(imageUrl);

      if (!response.ok) {
        throw new Error("Image generation failed");
      }

      setGeneratedImage(imageUrl);
    } catch (error) {
      console.error("Error fetching AI content:", error);
      setGeneratedImage("https://maxm-imggenurl.web.val.run/AI technology visualization with abstract digital elements representing machine learning and neural networks");
    }
  }
  // …
        </li>
        <li>
          <a href="#images">AI Visualization</a>
        </li>
  // …
}

.image-description {
  margin-top: 15px;
  padding: 15px;
```
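The snippet above puts the prompt, spaces included, straight into the `maxm-imggenurl` path and relies on the URL parser to percent-encode it. A minimal sketch, assuming the service reads the prompt from the URL path, that encodes the prompt explicitly and keeps the same "check `response.ok`, otherwise fall back" logic. `imageUrlFor`, `loadImageUrl`, and `Fetcher` are hypothetical names, and the fetch function is passed in so the logic can be exercised without a network.

```typescript
// Hypothetical helpers mirroring the snippet's image-loading logic.
const IMAGE_SERVICE = "https://maxm-imggenurl.web.val.run/";

type Fetcher = (url: string) => Promise<{ ok: boolean }>;

// Puts the percent-encoded prompt in the path. encodeURIComponent also
// escapes "/", "?" and "#", which would otherwise alter the path or start
// a query string or fragment.
function imageUrlFor(prompt: string): string {
  return IMAGE_SERVICE + encodeURIComponent(prompt.trim());
}

// Returns the generated-image URL if the service answers with a 2xx status,
// otherwise the URL for a fallback prompt.
async function loadImageUrl(prompt: string, fetcher: Fetcher, fallbackPrompt: string): Promise<string> {
  const url = imageUrlFor(prompt);
  try {
    const res = await fetcher(url);
    if (!res.ok) throw new Error("Image generation failed");
    return url;
  } catch (error) {
    console.error("Error fetching image:", error);
    return imageUrlFor(fallbackPrompt);
  }
}
```

In the component this would be called as `setGeneratedImage(await loadImageUrl(prompt, fetch, fallbackPrompt))`.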

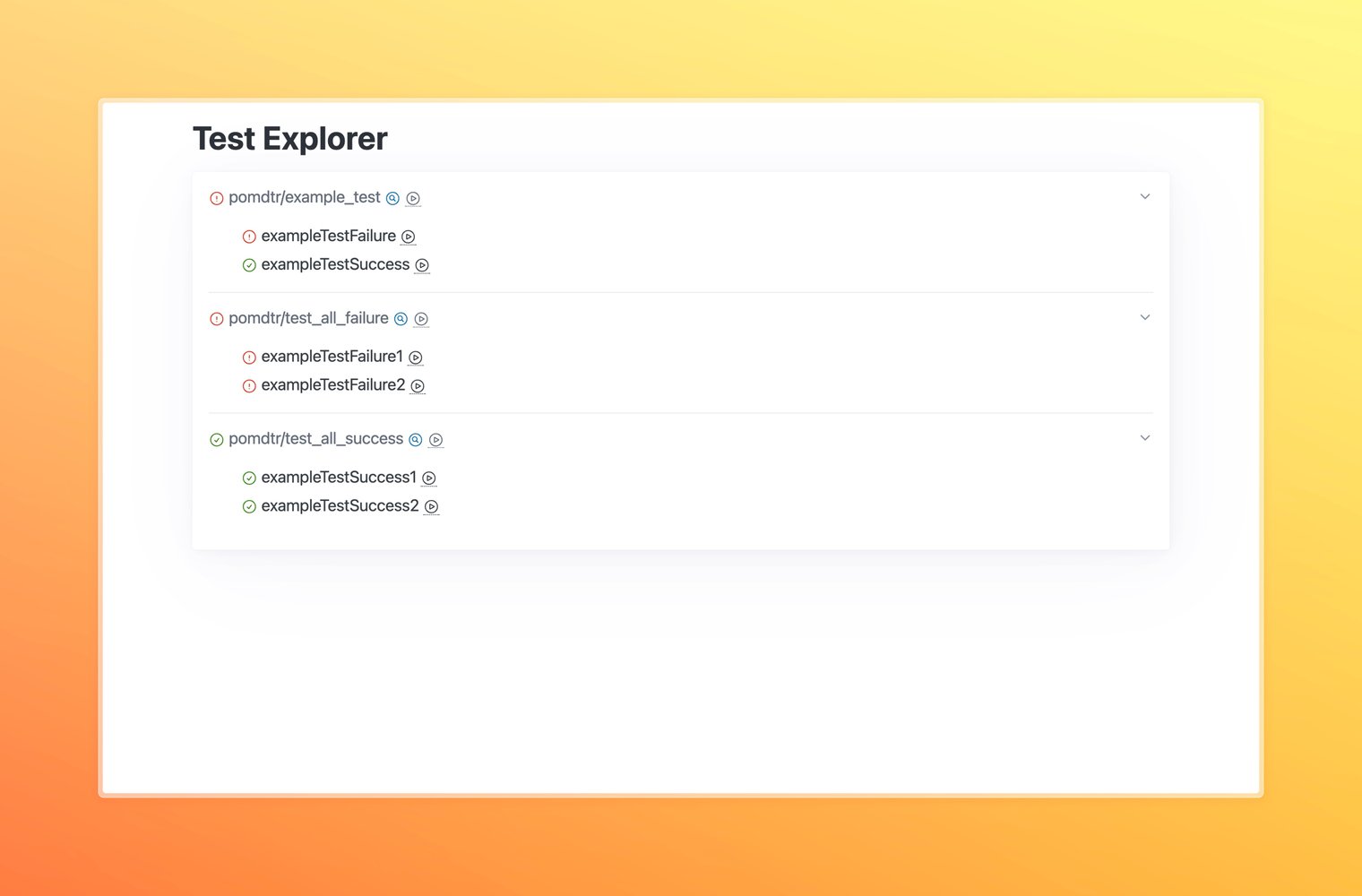

test_explorer / README.md (1 match)

```md
# Test Explorer

Click on the play button next to list items to run them.
```

```html
<meta charset="UTF-8">
<meta name="viewport" content="width=device-width, initial-scale=1.0">
<link rel="icon" type="image/png" href="https://labspace.ai/ls2-circle.png" />
<title>Breakdown</title>
<meta property="og:title" content="Breakdown" />
<meta property="og:description" content="Break down topics into different perspectives and arguments with AI-powered balanced analysis" />
<meta property="og:image" content="https://yawnxyz-og.web.val.run/img2?link=https://breakdown.labspace.ai&title=Breakdown&subtitle=Balanced+perspective+analysis&panelWidth=700&attachment=https://f2.phage.directory/capsid/20bEQGy3/breakdown-scr2_public.png" />
<meta property="og:url" content="https://breakdown.labspace.ai/" />
<meta property="og:type" content="website" />
<meta name="twitter:card" content="summary_large_image" />
<meta name="twitter:title" content="Breakdown" />
<meta name="twitter:description" content="Break down topics into different perspectives and arguments with AI-powered balanced analysis" />
<meta name="twitter:image" content="https://yawnxyz-og.web.val.run/img2?link=https://breakdown.labspace.ai&title=Breakdown&subtitle=Balanced+perspective+analysis&panelWidth=700&attachment=https://f2.phage.directory/capsid/20bEQGy3/breakdown-scr2_public.png" />

<script src="https://cdn.tailwindcss.com"></script>
```
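The `og:*` and `twitter:*` tags in this snippet repeat the same title, description, and image URL. A sketch, assuming the page's HTML is assembled as a string in TypeScript (the val's own template is not shown), that emits both sets from one object and escapes attribute values, so the `&` characters in the image URL become `&amp;`. `SocialMeta`, `escapeAttr`, and `socialMetaTags` are hypothetical names.

```typescript
interface SocialMeta {
  title: string;
  description: string;
  image: string;
  url: string;
}

// Escapes the characters that are significant inside a double-quoted attribute.
function escapeAttr(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/"/g, "&quot;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;");
}

// Builds the Open Graph and Twitter Card tags from a single source of truth.
function socialMetaTags(meta: SocialMeta): string {
  const tags: Array<[attr: "property" | "name", key: string, value: string]> = [
    ["property", "og:title", meta.title],
    ["property", "og:description", meta.description],
    ["property", "og:image", meta.image],
    ["property", "og:url", meta.url],
    ["property", "og:type", "website"],
    ["name", "twitter:card", "summary_large_image"],
    ["name", "twitter:title", meta.title],
    ["name", "twitter:description", meta.description],
    ["name", "twitter:image", meta.image],
  ];
  return tags
    .map(([attr, key, value]) => `<meta ${attr}="${key}" content="${escapeAttr(value)}" />`)
    .join("\n");
}
```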

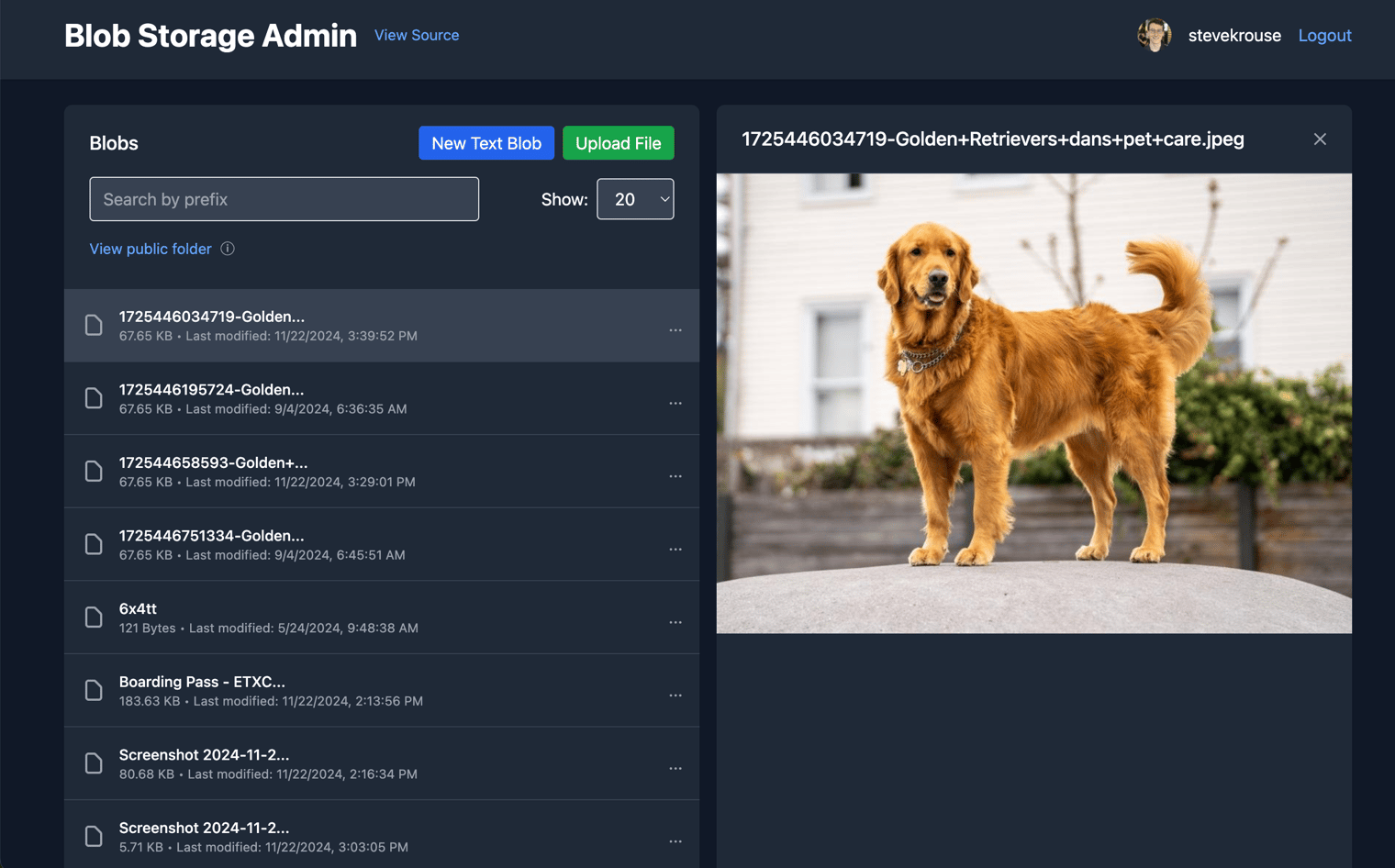

blob_admin / README.md (1 match)

```md
This is a lightweight Blob Admin interface to view and debug your Blob data.

Versions 0-17 of this val were done with Hono and server-rendering.
```

```tsx
<div key={index} className="bg-white shadow-md rounded px-4 sm:px-8 pt-6 pb-8 mb-8">
  <h3 className="text-2xl font-bold mb-4 text-indigo-500">{recipe.name}</h3>
  {recipe.image && (
    <div className="mb-6">
      <img src={recipe.image} alt={recipe.name} className="w-full h-64 object-cover rounded-lg" />
    </div>
  )}
// …
const recipes = JSON.parse(jsonContent);

// Generate images for each recipe
const recipesWithImages = await Promise.all(
  recipes.map(async (recipe) => {
    try {
      const imageUrl = await generateImage(recipe.name);
      return { ...recipe, image: imageUrl };
    } catch (error) {
      console.error(`Failed to generate image for ${recipe.name}:`, error);
      return { ...recipe, image: null };
    }
  })
);

return new Response(JSON.stringify({ recipes: recipesWithImages }), {
  headers: { "Content-Type": "application/json" },
});
// …
<meta property="og:title" content="Lazy Cook">
<meta property="og:description" content="If you don't feel like going through your recipe books, Lazy Cook to the rescue!">
<meta property="og:image" content="https://opengraph.b-cdn.net/production/images/90b45fbc-3605-4722-97d5-c707f94488ca.jpg?token=QsSAtAFq7L3G7tMgFKZa1vzT5hYhPOAsXtgLfWUE5zk&height=800&width=1200&expires=33269333680">

<!-- Twitter Meta Tags -->
<meta name="twitter:card" content="summary_large_image">
<meta property="twitter:domain" content="karkowg-lazycook.web.val.run">
<meta property="twitter:url" content="https://karkowg-lazycook.web.val.run/">
<meta name="twitter:title" content="Lazy Cook">
<meta name="twitter:description" content="If you don't feel like going through your recipe books, Lazy Cook to the rescue!">
<meta name="twitter:image" content="https://opengraph.b-cdn.net/production/images/90b45fbc-3605-4722-97d5-c707f94488ca.jpg?token=QsSAtAFq7L3G7tMgFKZa1vzT5hYhPOAsXtgLfWUE5zk&height=800&width=1200&expires=33269333680">

<!-- Meta Tags Generated via https://www.opengraph.xyz -->
// …
}

async function generateImage(recipeName: string): Promise<string> {
  const response = await fetch(`https://maxm-imggenurl.web.val.run/${encodeURIComponent(recipeName)}`);
  if (!response.ok) {
    throw new Error(`Failed to generate image for ${recipeName}`);
  }
  return response.url;
```
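The recipe handler fans out one image request per recipe with `Promise.all` and catches inside each callback, so a single failed image becomes `image: null` instead of rejecting the whole batch. The same pattern pulled into a generic helper, as a sketch; `mapWithFallback` and `attachImages` are hypothetical names, and the image generator is injected so it can be stubbed. `Promise.allSettled` would be an alternative when the caller wants to keep the error objects.

```typescript
// Runs `fn` on every item concurrently. If a call rejects, that item gets
// `fallback(item, error)` instead, so one failure never rejects the batch.
async function mapWithFallback<T, R>(
  items: T[],
  fn: (item: T) => Promise<R>,
  fallback: (item: T, error: unknown) => R,
): Promise<Awaited<R>[]> {
  return Promise.all(
    items.map(async (item) => {
      try {
        // `await` is required here so a rejection is caught by this try block.
        return await fn(item);
      } catch (error) {
        return fallback(item, error);
      }
    }),
  );
}

interface Recipe {
  name: string;
  image?: string | null;
}

// Mirrors the handler above with the image generator passed in.
async function attachImages(
  recipes: Recipe[],
  generateImage: (name: string) => Promise<string>,
): Promise<Recipe[]> {
  return mapWithFallback<Recipe, Recipe>(
    recipes,
    async (recipe) => ({ ...recipe, image: await generateImage(recipe.name) }),
    (recipe, error) => {
      console.error(`Failed to generate image for ${recipe.name}:`, error);
      return { ...recipe, image: null };
    },
  );
}
```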

socialDataSearch / main.tsx (1 match)

```tsx
  created_at: string;
  profile_banner_url: string;
  profile_image_url_https: string;
  can_dm: boolean;
}
```
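An interface like this only describes the shape the code expects; JSON returned by an external API is not checked at runtime. A minimal sketch of a type guard for the four fields visible in this excerpt. The rest of the interface is cut off, so `ProfileFields` and `isProfileFields` are hypothetical names that cover only these fields.

```typescript
interface ProfileFields {
  created_at: string;
  profile_banner_url: string;
  profile_image_url_https: string;
  can_dm: boolean;
}

// Narrows an unknown JSON value to ProfileFields by checking each field's type.
function isProfileFields(value: unknown): value is ProfileFields {
  if (typeof value !== "object" || value === null) return false;
  const v = value as Record<string, unknown>;
  return (
    typeof v.created_at === "string" &&
    typeof v.profile_banner_url === "string" &&
    typeof v.profile_image_url_https === "string" &&
    typeof v.can_dm === "boolean"
  );
}
```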

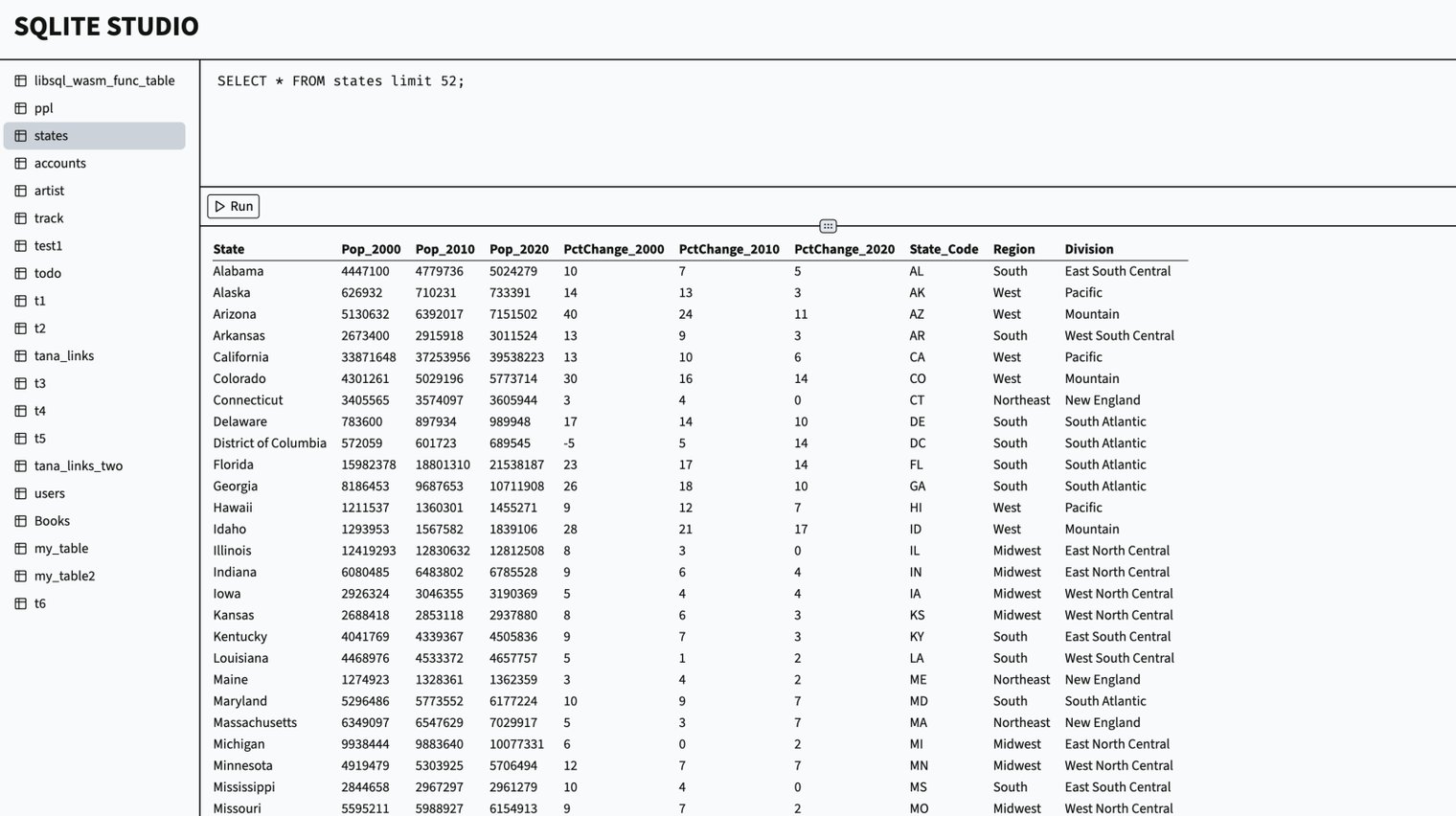

sqliteExplorerApp / README.md (1 match)

```md
View and interact with your Val Town SQLite data. It's based on Steve's excellent [SQLite Admin](https://www.val.town/v/stevekrouse/sqlite_admin?v=46) val, adding the ability to run SQLite queries directly in the interface. This new version has a revised UI that's heavily inspired by [LibSQL Studio](https://github.com/invisal/libsql-studio) by [invisal](https://github.com/invisal). It is now more of an SPA, with tables, queries and results showing up on the same page.

## Install
```

forEmulated1 / main.tsx (23 matches)

```tsx
import { createRoot } from "https://esm.sh/react-dom/client";
import debounce from "https://esm.sh/lodash.debounce";
import imageCompression from "https://esm.sh/browser-image-compression";

function App() {
  // …
  const [editPrompt, setEditPrompt] = useState('');
  const [isRefining, setIsRefining] = useState(false);
  const [lastUploadedImage, setLastUploadedImage] = useState(null);
  const [showCopiedMessage, setShowCopiedMessage] = useState(false);
  const [theme, setTheme] = useState(() => {
  // …
  }, []);

  const generateLandingPage = async (isInitial = true, imageBase64 = null, existingCode = '', editPrompt = '') => {
    setIsLoading(true);
    setIsRefining(isInitial ? false : true);
    // …
      body: JSON.stringify({
        isInitial,
        imageBase64,
        existingCode,
        editPrompt
    // …
  };

  const compressImage = async (file) => {
    const options = {
      maxSizeMB: 1,
      // …
    };
    try {
      const compressedFile = await imageCompression(file, options);
      return compressedFile;
    } catch (error) {
      console.error("Error compressing image:", error);
      return file;
    }
  };

  const handleImageUpload = async (event) => {
    const file = event.target.files[0];
    if (file) {
      const compressedFile = await compressImage(file);
      const reader = new FileReader();
      reader.onloadend = () => {
        const base64 = reader.result.split(',')[1];
        setLastUploadedImage(base64);
        generateLandingPage(true, base64);
      };
  // …

  const handleRegenerate = () => {
    if (lastUploadedImage) {
      generateLandingPage(true, lastUploadedImage);
    } else {
      alert("Please upload an image first before regenerating.");
    }
  };
  // …
          <input
            type="file"
            accept="image/*"
            ref={fileInputRef}
            onChange={handleImageUpload}
            className="hidden"
          />
          // …
            disabled={isLoading}
          >
            {isLoading ? 'Generating...' : 'Upload Design Image'}
          </button>
          <button
            onClick={handleRegenerate}
            className="bg-green-500 text-white px-4 py-2 rounded dark:bg-green-600 hover:bg-green-600 dark:hover:bg-green-700 transition-colors"
            disabled={isLoading || !lastUploadedImage}
          >
            Regenerate
// …
  const openai = new OpenAI();

  const { isInitial, imageBase64, existingCode, editPrompt } = await request.json();

  console.log('Refinement Request Details:', {
    isInitial,
    imageBase64: imageBase64 ? `${imageBase64.slice(0, 50)}...` : null,
    existingCodeLength: existingCode ? existingCode.length : 0,
    editPrompt
  // …
  let designDescription = '';

  if (isInitial && imageBase64) {
    const visionResponse = await openai.chat.completions.create({
      model: "gpt-4-vision-preview",
      // …
          {
            type: "text",
            text: "Analyze this landing page design image in detail. Focus on the following aspects:\n\n1. Overall layout structure (header, main sections, footer)\n2. Color scheme (primary, secondary, accent colors)\n3. Typography (font styles, sizes, hierarchy)\n4. Key visual elements (images, icons, illustrations)\n5. Call-to-action buttons and their placement\n6. Navigation menu structure\n7. Content sections and their arrangement\n8. Mobile responsiveness considerations\n9. Any unique or standout design features\n\nProvide a comprehensive description that a web developer could use to accurately recreate this design using HTML and Tailwind CSS."
          },
          {
            type: "image_url",
            image_url: { url: `data:image/jpeg;base64,${imageBase64}` }
          }
        ]
```
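The upload handler keeps only the part of the `FileReader` data URL after the comma, and the server then rebuilds it as `data:image/jpeg;base64,...`, labelling every upload as JPEG although the input accepts any `image/*` type. A sketch, assuming the client sends the full `reader.result` string instead, that parses the data URL once and rebuilds the `image_url` content part with the original MIME type. `parseDataUrl`, `visionContent`, `TextPart`, and `ImagePart` are hypothetical names; the part shapes follow the text and `image_url` objects already used in the snippet.

```typescript
interface ParsedDataUrl {
  mimeType: string;
  base64: string;
}

interface TextPart {
  type: "text";
  text: string;
}

interface ImagePart {
  type: "image_url";
  image_url: { url: string };
}

// Splits "data:<mime>;base64,<payload>" into its MIME type and payload.
// Returns null for anything that is not a base64 data URL.
function parseDataUrl(dataUrl: string): ParsedDataUrl | null {
  const match = /^data:([^;,]+);base64,(.+)$/.exec(dataUrl);
  if (!match) return null;
  return { mimeType: match[1], base64: match[2] };
}

// Builds the user-message content parts: one text part and one image part
// whose data URL keeps the uploaded file's real MIME type.
function visionContent(prompt: string, image: ParsedDataUrl): [TextPart, ImagePart] {
  return [
    { type: "text", text: prompt },
    { type: "image_url", image_url: { url: `data:${image.mimeType};base64,${image.base64}` } },
  ];
}
```

Validating the string with `parseDataUrl` on the server also lets the handler reject malformed uploads before calling the model.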

noCollectionTracker / README.md (2 matches)

```md
3. **Reflection Stage**: After 5 "No" responses, users enter a reflection stage to contemplate their journey.
4. **Motivational Quotes**: Dynamic quotes change based on the user's progress to provide encouragement.
5. **Journey Visualization**: Users can upload a symbolic image representing their journey.
6. **PDF Generation**: At the end of the challenge, users can generate a PDF summary of their journey.
7. **Data Privacy**: All data is stored locally on the user's device, ensuring privacy and security.

…

2. **Reflection Stage**:
   - Triggered after receiving 5 "No" responses.
   - Users can upload a symbolic image and write a comprehensive reflection.

3. **Completion Stage**:
```
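The README describes a stage progression in which the fifth "No" response moves the user into the reflection stage. A minimal sketch of that transition as a pure reducer. The first stage's name is cut off in the excerpt, so it is called `collecting` here, and the completion trigger is not shown, so this sketch assumes that submitting the reflection completes the challenge. `Stage`, `TrackerState`, `TrackerEvent`, and `nextState` are hypothetical names.

```typescript
type Stage = "collecting" | "reflection" | "completion";

interface TrackerState {
  stage: Stage;
  noCount: number;
}

type TrackerEvent =
  | { kind: "response"; answer: "yes" | "no" }
  | { kind: "reflectionSubmitted" };

// Number of "No" responses that triggers the reflection stage (from the README).
const NO_THRESHOLD = 5;

function nextState(state: TrackerState, event: TrackerEvent): TrackerState {
  switch (state.stage) {
    case "collecting": {
      // Only "No" responses advance the counter.
      if (event.kind !== "response" || event.answer !== "no") return state;
      const noCount = state.noCount + 1;
      return { stage: noCount >= NO_THRESHOLD ? "reflection" : "collecting", noCount };
    }
    case "reflection":
      // Assumption: submitting the reflection completes the challenge.
      return event.kind === "reflectionSubmitted" ? { ...state, stage: "completion" } : state;
    case "completion":
      return state;
  }
}
```

Keeping the transition pure makes it easy to persist `TrackerState` in local storage, in line with the README's local-only data note.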

md_links_resolver / main.tsx (1 match)

```html
<title>Markdown Links Resolver</title>
<meta name="viewport" content="width=device-width, initial-scale=1">
<link rel="icon" type="image/svg+xml" href="data:image/svg+xml,<svg xmlns='http://www.w3.org/2000/svg' viewBox='0 0 256 256'><text font-size='200' x='50%' y='50%' text-anchor='middle' dominant-baseline='central'>🔗</text></svg>">
<style>
  body, html {
```
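The favicon in this snippet is an inline SVG data URI that draws a single emoji. A sketch of a helper that builds the same kind of `href`, percent-encoding the SVG so characters such as `#` or `"` cannot end the URL or the attribute early. `emojiFavicon` is a hypothetical name; the SVG markup is copied from the snippet.

```typescript
// Builds an SVG data URI that renders one emoji, for use in
// <link rel="icon" type="image/svg+xml" href="...">.
function emojiFavicon(emoji: string): string {
  const svg =
    "<svg xmlns='http://www.w3.org/2000/svg' viewBox='0 0 256 256'>" +
    "<text font-size='200' x='50%' y='50%' text-anchor='middle' dominant-baseline='central'>" +
    emoji +
    "</text></svg>";
  // encodeURIComponent escapes '#', '%', '"', '<', '>' and non-ASCII characters
  // (the emoji becomes UTF-8 percent-escapes); single quotes are left as-is.
  return "data:image/svg+xml," + encodeURIComponent(svg);
}
```

In a server-rendered template this would be used as `<link rel="icon" type="image/svg+xml" href="${emojiFavicon("🔗")}">`.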