yuktiVoiceAssistant / main.tsx (53 matches)

    function App() {
      const [messages, setMessages] = useState([
        { role: 'assistant', content: "Hi! I'm Yukti, your friendly female AI assistant. I can help you chat or generate images! How can I assist you today? 👩💻" }
      ]);
      const [inputText, setInputText] = useState('');
      const [isLoading, setIsLoading] = useState(false);
      const [mode, setMode] = useState<'chat' | 'image'>('chat');
      const [generatedImages, setGeneratedImages] = useState<string[]>([]);
      const [imageStyle, setImageStyle] = useState<string>('photorealistic');
      const [imageCount, setImageCount] = useState<number>(2);
      const messagesEndRef = useRef(null);

    // ...
    setInputText('');
    setIsLoading(true);
    setGeneratedImages([]);

    try {
      const response = await fetch(mode === 'chat' ? '/chat' : '/generate-image', {
        method: 'POST',
        body: JSON.stringify({
          messages: [...messages, newUserMessage],
          prompt: inputText,
          style: imageStyle,
          count: imageCount
        })
      });
    // ...
        synthesizeSpeech(assistantResponse);
      } else {
        // Ensure imageUrls is always an array, even if undefined
        const imageUrls = Array.isArray(data.imageUrls) ? data.imageUrls : [];
        setGeneratedImages(imageUrls);
        const imageMessage = {
          role: 'assistant',
          content: imageUrls.length > 0
            ? `I've generated ${imageUrls.length} ${imageStyle} images for "${inputText}"`
            : "Sorry, I couldn't generate any images."
        };
        setMessages(prev => [...prev, imageMessage]);
      }
    } catch (error) {
    // ...

    const toggleMode = () => {
      setMode(prev => prev === 'chat' ? 'image' : 'chat');
      setGeneratedImages([]);
    };

    const imageStyles = [
      'photorealistic',
      'digital art',
    // ...
    ];

    const imageCountOptions = [1, 2, 3, 4];

    return (
    // ...
    <div className="chat-header">
      <h1>Yukti 👩💻</h1>
      <p>{mode === 'chat' ? 'Chat Mode' : 'Image Generation Mode'}</p>
    </div>
    {mode === 'image' && (
    // ...
    <div className="image-controls">
      <div className="style-selector">
        <label>Image Style:</label>
        <select
          value={imageStyle}
          onChange={(e) => setImageStyle(e.target.value)}
        >
          {imageStyles.map(style => (
            <option key={style} value={style}>{style}</option>
          ))}
    // ...
      </div>
      <div className="count-selector">
        <label>Number of Images:</label>
        <select
          value={imageCount}
          onChange={(e) => setImageCount(Number(e.target.value))}
        >
          {imageCountOptions.map(count => (
            <option key={count} value={count}>{count}</option>
          ))}
    // ...
      </div>
    ))}
    {generatedImages.length > 0 && (
      <div className="message assistant image-result">
        <div className="image-gallery">
          {generatedImages.map((imageUrl, index) => (
            <img
              key={index}
              src={imageUrl}
              alt={`Generated image ${index + 1}`}
              className="generated-image"
            />
          ))}
    // ...
      onClick={toggleMode}
      className="mode-toggle-inline"
      title={mode === 'chat' ? 'Switch to Image Generation' : 'Switch to Chat'}
    >
      {mode === 'chat' ? '🖼️' : '💬'}
    // ...
      value={inputText}
      onChange={(e) => setInputText(e.target.value)}
      placeholder={mode === 'chat' ? "Talk to Yukti..." : "Describe the image you want..."}
      disabled={isLoading}
    />
    // ...
    }

    if (url.pathname === '/generate-image') {
      // Verify images method exists
      if (!openai.images || !openai.images.generate) {
        throw new Error("OpenAI object does not have the expected image generation method");
      }

      // ...
      const { prompt, style, count } = body;

      const imageResponse = await openai.images.generate({
        model: "dall-e-3",
        prompt: `A ${style} image of: ${prompt}.
        High-quality, detailed, and visually appealing composition.`,
        n: count,
      // ...
      });

      const imageUrls = imageResponse.data?.map(img => img.url) || [];

      return new Response(JSON.stringify({ imageUrls }), {
        headers: { 'Content-Type': 'application/json' }
      });
    // ...
    <html>
      <head>
        <title>Yukti - AI Assistant with Image Generation</title>
        <style>${css}</style>
      </head>
    // ...
    }

    .image-gallery {
      display: flex;
      flex-wrap: wrap;
    // ...
    }

    .generated-image {
      max-width: 200px;
      max-height: 200px;
    // ...
    }

    .image-controls {
      display: flex;
      justify-content: space-around;
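
The `/generate-image` handler in the excerpt above destructures `prompt`, `style`, and `count` from the request body and passes them straight to `openai.images.generate`. Below is a minimal sketch of validating that body first. `normalizeImageRequest`, `ALLOWED_STYLES`, and `MAX_IMAGES` are hypothetical names that do not appear in the val, and the style list contains only the two styles visible in the excerpt.

```typescript
// Hypothetical helper: not part of the val shown above.
interface ImageRequest {
  prompt: string;
  style: string;
  count: number;
}

// Assumption: only the two styles visible in the excerpt are listed here.
const ALLOWED_STYLES: string[] = ["photorealistic", "digital art"];
// Mirrors imageCountOptions = [1, 2, 3, 4] from the excerpt.
const MAX_IMAGES = 4;

function normalizeImageRequest(body: unknown): ImageRequest {
  const raw = (body ?? {}) as Record<string, unknown>;

  // Reject missing or blank prompts instead of sending them upstream.
  const prompt = typeof raw.prompt === "string" ? raw.prompt.trim() : "";
  if (prompt.length === 0) {
    throw new Error("prompt must be a non-empty string");
  }

  // Fall back to the first known style when the value is unknown.
  const style =
    typeof raw.style === "string" && ALLOWED_STYLES.indexOf(raw.style) >= 0
      ? raw.style
      : ALLOWED_STYLES[0];

  // Clamp the count into 1..MAX_IMAGES; non-integers become 1.
  const requested = Number(raw.count);
  const count = Number.isInteger(requested)
    ? Math.min(Math.max(requested, 1), MAX_IMAGES)
    : 1;

  return { prompt, style, count };
}
```

As far as the OpenAI API reference describes it, `dall-e-3` accepts only `n: 1`, so a handler built on this sketch would probably need to issue `count` separate requests rather than pass `count` as `n`.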

huntTheWumpusWebVersion / main.tsx (24 matches)

    const [message, setMessage] = useState("Welcome to Hunt the Wumpus! Kill the Wumpus to reveal the treasure!");
    const [gameState, setGameState] = useState('playing');
    const [wumpusImageUrl, setWumpusImageUrl] = useState(null);
    const [pitImageUrl, setPitImageUrl] = useState(null);
    const [winImageUrl, setWinImageUrl] = useState(null);
    const [lossReason, setLossReason] = useState(null);

    // Generate a dancing Wumpus image when game is lost to Wumpus
    useEffect(() => {
      if (gameState === 'lost' && lossReason === 'wumpus') {
        const imageDescription = "a mischievous wumpus monster dancing victoriously, cartoon style, transparent background";
        const encodedDesc = encodeURIComponent(imageDescription);
        const imageUrl = `https://maxm-imggenurl.web.val.run/${encodedDesc}`;
        setWumpusImageUrl(imageUrl);
      }
    }, [gameState, lossReason]);

    // Generate a pit fall image when falling into a pit
    useEffect(() => {
      if (gameState === 'lost' && lossReason === 'pit') {
        const imageDescription = "a cartoon character falling down a deep dark pit, comical falling pose, transparent background";
        const encodedDesc = encodeURIComponent(imageDescription);
        const imageUrl = `https://maxm-imggenurl.web.val.run/${encodedDesc}`;
        setPitImageUrl(imageUrl);
      }
    }, [gameState, lossReason]);

    // Generate a winning celebration image
    useEffect(() => {
      if (gameState === 'won') {
        const imageDescription = "heroic adventurer celebrating victory with treasure, cartoon style, epic pose, confetti in background";
        const encodedDesc = encodeURIComponent(imageDescription);
        const imageUrl = `https://maxm-imggenurl.web.val.run/${encodedDesc}`;
        setWinImageUrl(imageUrl);
      }
    }, [gameState]);
    // ...
      setMessage("New game started! Kill the Wumpus to reveal the treasure!");
      setGameState('playing');
      setWumpusImageUrl(null);
      setPitImageUrl(null);
      setWinImageUrl(null);
      setLossReason(null);
    };
    // ...
    }}>
      <img
        src={pitImageUrl}
        alt="Falling into Pit"
        style={{
    // ...
    }}>
      <img
        src={wumpusImageUrl}
        alt="Dancing Wumpus"
        style={{
    // ...
    ))}
    <img
      src={winImageUrl}
      alt="Victory Celebration"
      style={{
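
The three effects above repeat the same steps: pick a description, URL-encode it, and append it to the image-generation endpoint. One possible way to factor that out is sketched below. The base URL and the descriptions are copied from the excerpt; `imageUrlFor`, `OUTCOME_PROMPTS`, and `outcomeImageUrl` are hypothetical names introduced for illustration.

```typescript
// Base URL copied from the excerpt; everything else here is a hypothetical refactor.
const IMG_GEN_BASE = "https://maxm-imggenurl.web.val.run/";

// Encode a free-text description into a single URL path segment.
function imageUrlFor(description: string): string {
  return IMG_GEN_BASE + encodeURIComponent(description);
}

interface Outcome {
  gameState: string;
  lossReason: string | null;
}

// One description per game outcome, keyed as "lost:<reason>" or "won".
const OUTCOME_PROMPTS: Record<string, string> = {
  "lost:wumpus":
    "a mischievous wumpus monster dancing victoriously, cartoon style, transparent background",
  "lost:pit":
    "a cartoon character falling down a deep dark pit, comical falling pose, transparent background",
  "won":
    "heroic adventurer celebrating victory with treasure, cartoon style, epic pose, confetti in background",
};

// Returns the image URL for a finished game, or null while the game is still running.
function outcomeImageUrl({ gameState, lossReason }: Outcome): string | null {
  const key = gameState === "lost" ? `lost:${lossReason}` : gameState;
  const description = OUTCOME_PROMPTS[key];
  return description ? imageUrlFor(description) : null;
}
```

With a helper like this, a single effect depending on `[gameState, lossReason]` could replace the three separate ones, at the cost of storing one URL instead of three.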

createMovieSite / main.tsx (5 matches)

    function getMoviePosterUrl(movie) {
      return movie.poster_path
        ? `https://image.tmdb.org/t/p/w300${movie.poster_path}`
        : movie.poster_path_season
        ? `https://image.tmdb.org/t/p/w300${movie.poster_path_season}`
        : `https://cdn.zoechip.to/${movie.img ? "/data" + movie.img?.split("data")?.pop() : "images/no_poster.jpg"}`;
    }

    // ...
    src="${
      movie.backdrop_path
        ? `https://image.tmdb.org/t/p/w1280${movie.backdrop_path}`
        : `https://cdn.zoechip.to/${movie.img ? "/data" + movie.img?.split("data")?.pop() : "images/no_poster.jpg"}`
    }"
    class="w-full h-64 object-cover rounded-lg mb-4"
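
The CDN fallback in the excerpt above concatenates `https://cdn.zoechip.to/` with a string that already starts with `/data`, which appears to yield a double slash (`//data...`) in the resulting URL, and the same expression is repeated for the backdrop. A minimal typed sketch of one way to share the fallback follows. The `Movie` interface is inferred from the fields the excerpt reads, and `cdnFallbackUrl` and `posterUrl` are hypothetical names.

```typescript
// Shape inferred from the fields used in the excerpt; an assumption, not the val's type.
interface Movie {
  poster_path?: string | null;
  poster_path_season?: string | null;
  img?: string | null;
}

const TMDB_BASE = "https://image.tmdb.org/t/p";
const CDN_BASE = "https://cdn.zoechip.to";

// Build the CDN fallback without doubling the slash after the host.
function cdnFallbackUrl(img?: string | null): string {
  if (!img) return `${CDN_BASE}/images/no_poster.jpg`;
  // Keep whatever follows the last "data" in the string, as the original expression does.
  const tail = img.split("data").pop() ?? "";
  return `${CDN_BASE}/data${tail}`;
}

// Prefer the TMDB poster, then the season poster, then the CDN fallback.
function posterUrl(movie: Movie, size: string = "w300"): string {
  const path = movie.poster_path || movie.poster_path_season;
  return path ? `${TMDB_BASE}/${size}${path}` : cdnFallbackUrl(movie.img);
}
```

For example, `posterUrl({ img: "https://x/data/p/1.jpg" })` gives `https://cdn.zoechip.to/data/p/1.jpg`. The backdrop case could reuse `cdnFallbackUrl` with the `w1280` size.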

plantIdentifierApp / main.tsx (27 matches)

        bloomSeason: 'Late spring to early summer',
        height: '4-6 feet (1.2-1.8 meters)',
        imageUrl: 'https://maxm-imggenurl.web.val.run/damask-rose-detailed'
      },
      'Monstera deliciosa': {
    // ...
        bloomSeason: 'Rarely blooms indoors',
        height: '10-15 feet (3-4.5 meters) in natural habitat',
        imageUrl: 'https://maxm-imggenurl.web.val.run/monstera-deliciosa'
      }
    };
    // ...
    <div className="flex items-center mb-4">
      <img
        src={plant.imageUrl}
        alt={plant.commonName}
        className="w-24 h-24 object-cover rounded-full mr-4 shadow-md"
    // ...
    reader.readAsDataURL(file);
    reader.onloadend = async () => {
      const base64Image = reader.result.split(',')[1];

      const openai = new OpenAI({
    // ...
      {
        type: "text",
        text: "Identify the plant species in this image with high accuracy. Provide the scientific name, common name, and a detailed description. Include key identifying characteristics. If you cannot confidently identify the plant, explain why."
      },
      {
        type: "image_url",
        image_url: {
          url: `data:image/jpeg;base64,${base64Image}`
        }
      }
    // ...
      bloomSeason: 'Bloom season varies',
      height: 'Height varies by specific variety',
      imageUrl: 'https://maxm-imggenurl.web.val.run/unknown-plant'
    };

    // ...
        });
      } else {
        reject(new Error('Unable to extract plant details from the image'));
      }
    } catch (apiError) {
    // ...

      reader.onerror = (error) => {
        reject(new Error(`Image reading error: ${error}`));
      };
    } catch (error) {
    // ...
    // Main Plant Identifier Application Component
    function PlantIdentifierApp() {
      const [selectedImage, setSelectedImage] = useState(null);
      const [plantInfo, setPlantInfo] = useState(null);
      const [identificationError, setIdentificationError] = useState(null);
    // ...
      const canvasRef = useRef(null);

      const handleImageUpload = async (event) => {
        const file = event.target.files[0];
        processImage(file);
      };

      const processImage = async (file) => {
        if (file) {
          setSelectedImage(URL.createObjectURL(file));
          setIsLoading(true);
          setIdentificationError(null);
    // ...
          context.scale(-1, 1);
    // ...
          context.drawImage(video, -canvas.width, 0, canvas.width, canvas.height);
          context.setTransform(1, 0, 0, 1, 0, 0);
    // ...
          canvas.toBlob((blob) => {
            if (blob) {
              processImage(new File([blob], 'captured-plant.jpg', { type: 'image/jpeg' }));
            } else {
              setCameraError("Failed to create photo blob.");
            }
          }, 'image/jpeg');
        }
      }, [processImage]);

      const resetCapture = () => {
        setSelectedImage(null);
        setPlantInfo(null);
        setIdentificationError(null);
    // ...
    )}

    {!captureMode && !selectedImage && (
      <div className="space-y-4">
        <input
          type="file"
          accept="image/*"
          onChange={handleImageUpload}
          className="hidden"
          id="file-upload"
    // ...
          className="w-full bg-green-500 text-white py-3 rounded-lg text-center cursor-pointer hover:bg-green-600 transition duration-300 flex items-center justify-center"
        >
          📤 Upload Image
        </label>
        <button
    // ...
    )}

    {selectedImage && (
      <div className="mt-4 animate-fade-in">
        <img
          src={selectedImage}
          alt="Selected plant"
          className="w-full rounded-lg shadow-md mb-4"
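
The upload input in the excerpt above accepts `image/*`, but the request always rebuilds the data URL with a hard-coded `image/jpeg` type after splitting on the comma. A minimal sketch of keeping the MIME type the `FileReader` reported is shown below. `parseDataUrl` and `toImageUrlPart` are hypothetical helpers, and the `application/octet-stream` fallback is an assumption rather than anything the val does.

```typescript
interface ParsedDataUrl {
  mimeType: string;
  base64: string;
}

// Split a base64 data URL (as produced by FileReader.readAsDataURL) into its parts.
function parseDataUrl(dataUrl: string): ParsedDataUrl {
  const comma = dataUrl.indexOf(",");
  if (!dataUrl.startsWith("data:") || comma === -1) {
    throw new Error("not a data URL");
  }
  // Header looks like "image/png;base64".
  const parts = dataUrl.slice("data:".length, comma).split(";");
  if (parts[parts.length - 1] !== "base64") {
    throw new Error("expected a base64-encoded data URL");
  }
  return {
    // Assumption: fall back to a generic type when the header carries none.
    mimeType: parts[0] || "application/octet-stream",
    base64: dataUrl.slice(comma + 1),
  };
}

// Build the image_url message part with the original MIME type preserved.
function toImageUrlPart(dataUrl: string) {
  const { mimeType, base64 } = parseDataUrl(dataUrl);
  return {
    type: "image_url" as const,
    image_url: { url: `data:${mimeType};base64,${base64}` },
  };
}
```

Note that `reader.result` is typed `string | ArrayBuffer | null`, so a caller would likely want a `typeof reader.result === "string"` check before handing it to `parseDataUrl`.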

    "settings": {
      "url": url,
      "optionStr": "content,metadata,screenshot,pageshot,citation,doi,images,links",
      "crawlMode": "jina",
      "outputMode": "json"
    // ...
    title: '',
    description: '',
    image: '',
    pageshotUrl: '',
    screenshotUrl: ''
    // ...
    const title = metadata.title || metadata['og:title'] || metadata['twitter:title'] || 'No title available';
    const description = metadata.description || metadata['og:description'] || metadata['twitter:description'] || 'No description available';
    const image = metadata['og:image'] || metadata['twitter:image'] || '';
    const pageshotUrl = result.jina?.pageshot || '';
    const screenshotUrl = result.jina?.screenshot || '';
    // ...
    title,
    description,
    image,
    pageshotUrl: result.jina?.pageshot,
    screenshotUrl: result.jina?.screenshot
    // ...
    <meta charset="UTF-8">
    <meta name="viewport" content="width=device-width, initial-scale=1.0">
    <link rel="icon" type="image/png" href="https://labspace.ai/ls2-circle.png" />
    <title>PageShot — Get data about any web page</title>
    <meta property="og:title" content="PageShot — Get data about any web page" />
    <meta property="og:description" content="Get data about any web page" />
    <meta property="og:image" content="https://yawnxyz-og.web.val.run/img?link=https://pageshot.labspace.ai&title=PageShot&subtitle=Get+data+about+any+web+page" />
    <meta property="og:url" content="https://pageshot.labspace.ai" />
    <meta property="og:type" content="website" />
    <meta name="twitter:card" content="summary_large_image" />
    <meta name="twitter:title" content="PageShot — Get data about any web page" />
    <meta name="twitter:description" content="Get data about any web page" />
    <meta name="twitter:image" content="https://yawnxyz-og.web.val.run/img?link=https://pageshot.labspace.ai&title=PageShot&subtitle=Get+data+about+any+web+page" />
    <script src="https://cdn.tailwindcss.com"></script>
    <script src="https://unpkg.com/dexie@3.2.2/dist/dexie.js"></script>
    // ...
    <div class="OutputHeader bg-app-bg-alt p-4 rounded-lg mb-4">
      <div class="rounded-lg mb-4" x-show="metadata.title">
        <template x-if="metadata.image">
          <img :src="metadata.image.startsWith('http') ? metadata.image : new URL(metadata.image, input).href"
            alt="Page preview"
            class="max-w-48 h-auto rounded mt-2 mb-4">
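
The excerpt above resolves each field through a chain of `||` fallbacks and later turns a possibly relative `og:image` into an absolute URL with `new URL(metadata.image, input)`. A minimal sketch of both steps as standalone functions follows. `firstNonEmpty` and `resolveImageUrl` are hypothetical names, and returning an empty string for unparseable input is an assumption chosen to match the `''` defaults in the excerpt.

```typescript
type Metadata = Record<string, string | undefined>;

// Return the first non-empty value among the given keys, or the fallback.
function firstNonEmpty(metadata: Metadata, keys: string[], fallback: string): string {
  for (const key of keys) {
    const value = metadata[key];
    if (value) return value;
  }
  return fallback;
}

// Resolve an image reference against the page URL.
// new URL() leaves absolute URLs untouched and also handles
// root-relative ("/a.png") and protocol-relative ("//cdn/a.png") forms.
function resolveImageUrl(image: string, pageUrl: string): string {
  if (!image) return "";
  try {
    return new URL(image, pageUrl).href;
  } catch (e) {
    // Assumption: treat unparseable input as "no image".
    return "";
  }
}
```

Because `new URL(image, base)` already returns absolute inputs unchanged, the `startsWith('http')` check in the template may not be needed, and dropping it would also cover protocol-relative image URLs.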

captivatingLimeGuan / main.tsx (33 matches)

        bloomSeason: 'Late spring to early summer',
        height: '4-6 feet (1.2-1.8 meters)',
        imageUrl: 'https://maxm-imggenurl.web.val.run/damask-rose-detailed'
      },
      'Monstera deliciosa': {
    // ...
        bloomSeason: 'Rarely blooms indoors',
        height: '10-15 feet (3-4.5 meters) in natural habitat',
        imageUrl: 'https://maxm-imggenurl.web.val.run/monstera-deliciosa'
      },
      'Ficus lyrata': {
    // ...
        bloomSeason: 'Does not typically bloom indoors',
        height: '6-10 feet (1.8-3 meters) indoors',
        imageUrl: 'https://maxm-imggenurl.web.val.run/fiddle-leaf-fig'
      }
    };

    // Plant Identification Service (Simulated with more sophisticated matching)
    async function identifyPlant(imageFile) {
      return new Promise((resolve, reject) => {
        // Simulate API call with image processing
        const reader = new FileReader();
        reader.onloadend = () => {
          // Basic image analysis simulation
          const imageData = reader.result;
          // Sophisticated matching logic
    // ...
          const matchConfidences = {
            'Rosa damascena': calculateImageSimilarity(imageData, 'rose'),
            'Monstera deliciosa': calculateImageSimilarity(imageData, 'monstera'),
            'Ficus lyrata': calculateImageSimilarity(imageData, 'fiddle-leaf')
          };

    // ...
          }
        };
        reader.readAsDataURL(imageFile);
      });
    }

    // Simulated image similarity calculation
    function calculateImageSimilarity(imageData, plantType) {
      // In a real scenario, this would use machine learning techniques
      const randomFactor = Math.random() * 0.4 + 0.3; // Base randomness
      const typeSpecificBoost = {
    // ...
        'rose': imageData.includes('flower') ? 0.3 : 0,
        'monstera': imageData.includes('large-leaf') ? 0.3 : 0,
        'fiddle-leaf': imageData.includes('violin-shape') ? 0.3 : 0
      };

    // ...
    <div className="flex items-center mb-4">
      <img
        src={plant.imageUrl}
        alt={plant.commonName}
        className="w-24 h-24 object-cover rounded-full mr-4 shadow-md"
    // ...

    function PlantIdentifierApp() {
      const [selectedImage, setSelectedImage] = useState(null);
      const [plantInfo, setPlantInfo] = useState(null);
      const [identificationError, setIdentificationError] = useState(null);
    // ...
      const canvasRef = useRef(null);

      const handleImageUpload = async (event) => {
        const file = event.target.files[0];
        processImage(file);
      };

      const processImage = async (file) => {
        if (file) {
          setSelectedImage(URL.createObjectURL(file));
          setIsLoading(true);
          setIdentificationError(null);
    // ...
        if (videoRef.current && canvasRef.current) {
          const context = canvasRef.current.getContext('2d');
          context.drawImage(videoRef.current, 0, 0, 400, 300);
          canvasRef.current.toBlob((blob) => {
    // ...
            processImage(new File([blob], 'captured-plant.jpg', { type: 'image/jpeg' }));
          }, 'image/jpeg');
        }
      }, [processImage]);

      const resetCapture = () => {
        setSelectedImage(null);
        setPlantInfo(null);
        setIdentificationError(null);
    // ...
    <main>
      {/* Previous implementation remains the same */}
      {!captureMode && !selectedImage && (
        <div className="space-y-4">
          <input
            type="file"
            accept="image/*"
            onChange={handleImageUpload}
            className="hidden"
            id="file-upload"
    // ...
            className="w-full bg-green-500 text-white py-3 rounded-lg text-center cursor-pointer hover:bg-green-600 transition duration-300 flex items-center justify-center"
          >
            📤 Upload Image
          </label>
          <button
    // ...
      )}

      {selectedImage && (
        <div className="mt-4 animate-fade-in">
          <img
            src={selectedImage}
            alt="Selected plant"
            className="w-full rounded-lg shadow-md mb-4"
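
The excerpt above builds a `matchConfidences` map, but the lines that choose a winner are not among the matches. One plausible way to do that selection is sketched below. `bestMatch` is a hypothetical name, and the `0.5` threshold is an arbitrary assumption that does not come from the val.

```typescript
// Pick the highest-scoring key, or null when nothing clears the threshold.
// The default threshold of 0.5 is an illustrative assumption.
function bestMatch(
  confidences: Record<string, number>,
  threshold: number = 0.5
): string | null {
  let best: string | null = null;
  let bestScore = -Infinity;
  for (const name of Object.keys(confidences)) {
    const score = confidences[name];
    if (score > bestScore) {
      best = name;
      bestScore = score;
    }
  }
  return bestScore >= threshold ? best : null;
}
```

Since `imageData` in the excerpt is a base64 data URL, the hyphenated keywords `'large-leaf'` and `'violin-shape'` cannot occur in it (the base64 alphabet has no `-`), so the simulated boost would effectively never apply for those two types and the ranking is driven by `Math.random()`.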

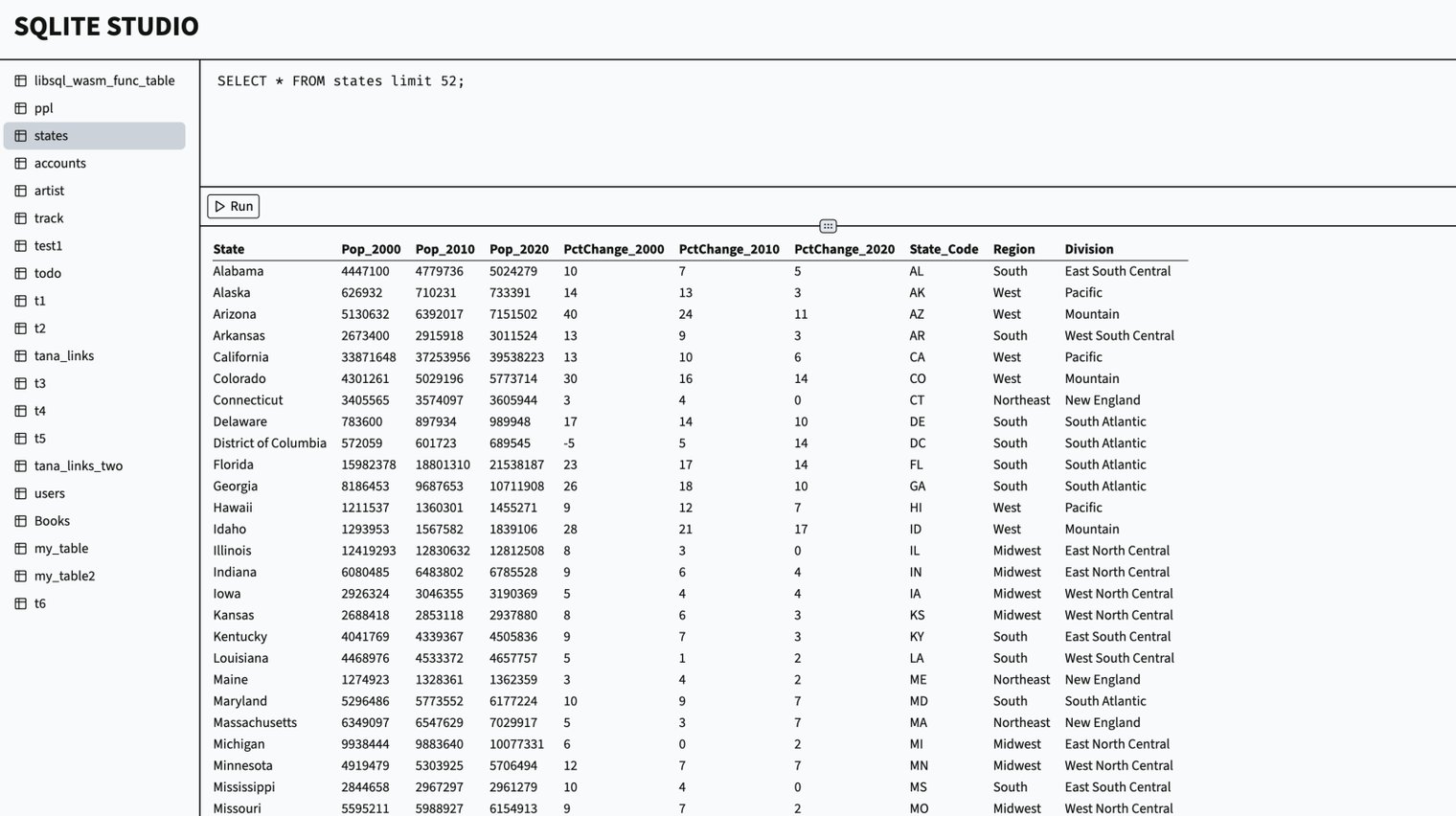

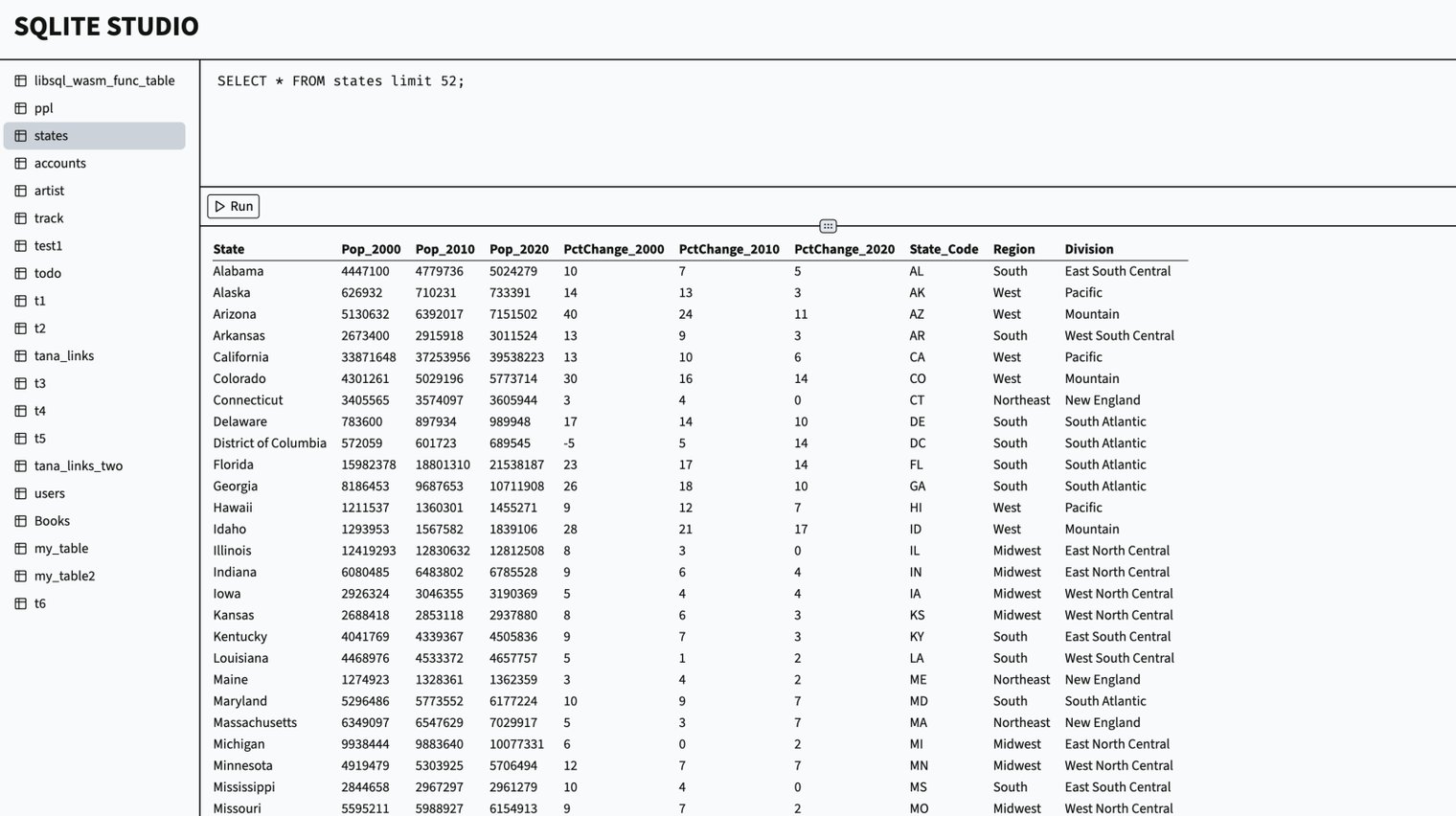

sqliteExplorerApp / README.md (1 match)

View and interact with your Val Town SQLite data. It's based on Steve's excellent [SQLite Admin](https://www.val.town/v/stevekrouse/sqlite_admin?v=46) val, adding the ability to run SQLite queries directly in the interface. This new version has a revised UI that's heavily inspired by [LibSQL Studio](https://github.com/invisal/libsql-studio) by [invisal](https://github.com/invisal). This is now more of an SPA, with tables, queries, and results showing up on the same page.

## Install

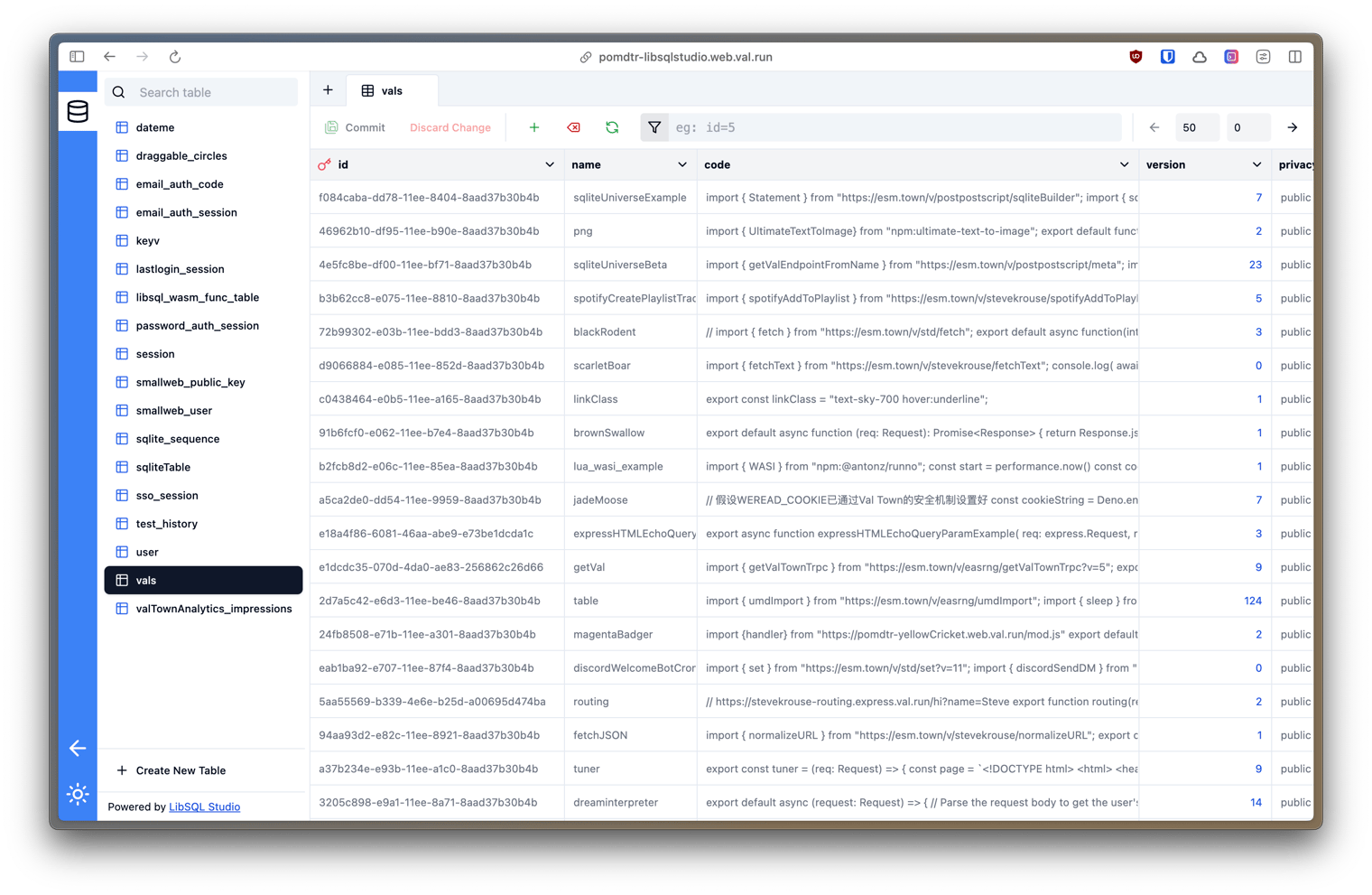

libsqlstudio / README.md (1 match)

# LibSQLStudio

I'll write down the instructions tomorrow. The short version is:

thrillingAmaranthKiwi / main.tsx (3 matches)

      title: location,
      icon: {
        url: 'data:image/svg+xml;utf8,<svg xmlns="http://www.w3.org/2000/svg" width="40" height="40" viewBox="0 0 24 24" fill="%23FF453A"><path d="M12 2c-5.33 4 0 10 0 10s5.33-6 0-10z"/></svg>',
        scaledSize: new window.google.maps.Size(40, 40)
      }
    // ...
      title: vet.name,
      icon: {
        url: 'data:image/svg+xml;utf8,<svg xmlns="http://www.w3.org/2000/svg" width="30" height="30" viewBox="0 0 24 24" fill="%2300E461"><path d="M12 2l3.09 6.26L22 9.27l-5 4.87 1.18 6.88L12 17.77l-6.18 3.25L7 14.14 2 9.27l6.91-1.01L12 2z"/></svg>',
        scaledSize: new window.google.maps.Size(30, 30)
      }
    // ...
        backgroundColor: theme.colors.background,
        minHeight: '100vh',
        backgroundImage: 'linear-gradient(to bottom, rgba(13,19,33,0.8), rgba(13,19,33,1))'
      }}
    >
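
The marker icons in the excerpt above are inline SVG data URLs in which only the `#` of the fill color is escaped by hand (`%23`). A minimal sketch of generating such URLs with `encodeURIComponent`, so every reserved character is escaped consistently, is shown below. `markerSvg` and `svgDataUrl` are hypothetical helpers; the path data and colors in the usage note are the ones from the excerpt.

```typescript
// Build a square single-path SVG icon on a 24x24 viewBox.
function markerSvg(pathData: string, fill: string, size: number): string {
  return (
    `<svg xmlns="http://www.w3.org/2000/svg" width="${size}" height="${size}" ` +
    `viewBox="0 0 24 24" fill="${fill}"><path d="${pathData}"/></svg>`
  );
}

// Percent-encode the whole document so "#", "<", ">" and quotes are all safe in a URL.
function svgDataUrl(svg: string): string {
  return "data:image/svg+xml;charset=utf-8," + encodeURIComponent(svg);
}
```

A location marker like the first one in the excerpt could then be written as `svgDataUrl(markerSvg("M12 2c-5.33 4 0 10 0 10s5.33-6 0-10z", "#FF453A", 40))`, with the color given in its ordinary `#RRGGBB` form.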