blob_admin/README.md

This is a lightweight Blob Admin interface to view and debug your Blob data.

To use this, fork it to your account.

blob_admin/main.tsx

```tsx
const { ValTown } = await import("npm:@valtown/sdk");
const vt = new ValTown();
const { email: authorEmail, profileImageUrl, username } = await vt.me.profile.retrieve();
// const authorEmail = me.email;
// …
  c.set("email", email);
  c.set("profile", { profileImageUrl, username });
  await next();
};
```
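The `c.set(...)` / `await next()` lines follow the onion-style middleware pattern that Hono uses: a middleware stores values on the context, then hands control to the rest of the chain. The sketch below reproduces that pattern without a framework so the control flow is visible. `Ctx`, `compose`, and `ownerOnly` are hypothetical names, not Hono's API, and the owner check (comparing the session email with `authorEmail`) is an assumption suggested by the commented-out `authorEmail` line rather than code taken from `main.tsx`.

```typescript
// Hypothetical minimal context; Hono's real Context API is much richer.
interface Ctx {
  set(key: string, value: unknown): void;
  get(key: string): unknown;
  status: number;
}
type Next = () => Promise<void>;
type Middleware = (c: Ctx, next: Next) => Promise<void>;

function makeCtx(): Ctx {
  const vars = new Map<string, unknown>();
  return {
    set: (key, value) => {
      vars.set(key, value);
    },
    get: (key) => vars.get(key),
    status: 200,
  };
}

// Onion-style composition: each middleware receives a `next` that runs the rest
// of the chain, so code after `await next()` runs on the way back out.
function compose(stack: Middleware[]): (c: Ctx) => Promise<void> {
  return (c) => {
    const dispatch = (i: number): Promise<void> =>
      i < stack.length ? stack[i](c, () => dispatch(i + 1)) : Promise.resolve();
    return dispatch(0);
  };
}

// Hypothetical owner gate: continue only when the session email matches the author.
function ownerOnly(authorEmail: string, getSessionEmail: () => string | undefined): Middleware {
  return async (c, next) => {
    const email = getSessionEmail();
    if (email !== authorEmail) {
      c.status = 403; // not calling next() stops the chain here
      return;
    }
    c.set("email", email);
    await next();
  };
}
```

With `compose([ownerOnly(author, getEmail), handler])`, `handler` runs only when the session belongs to the author, and it can read `c.get("email")` because the gate set it before calling `next()`.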

blob_admin/app.tsx

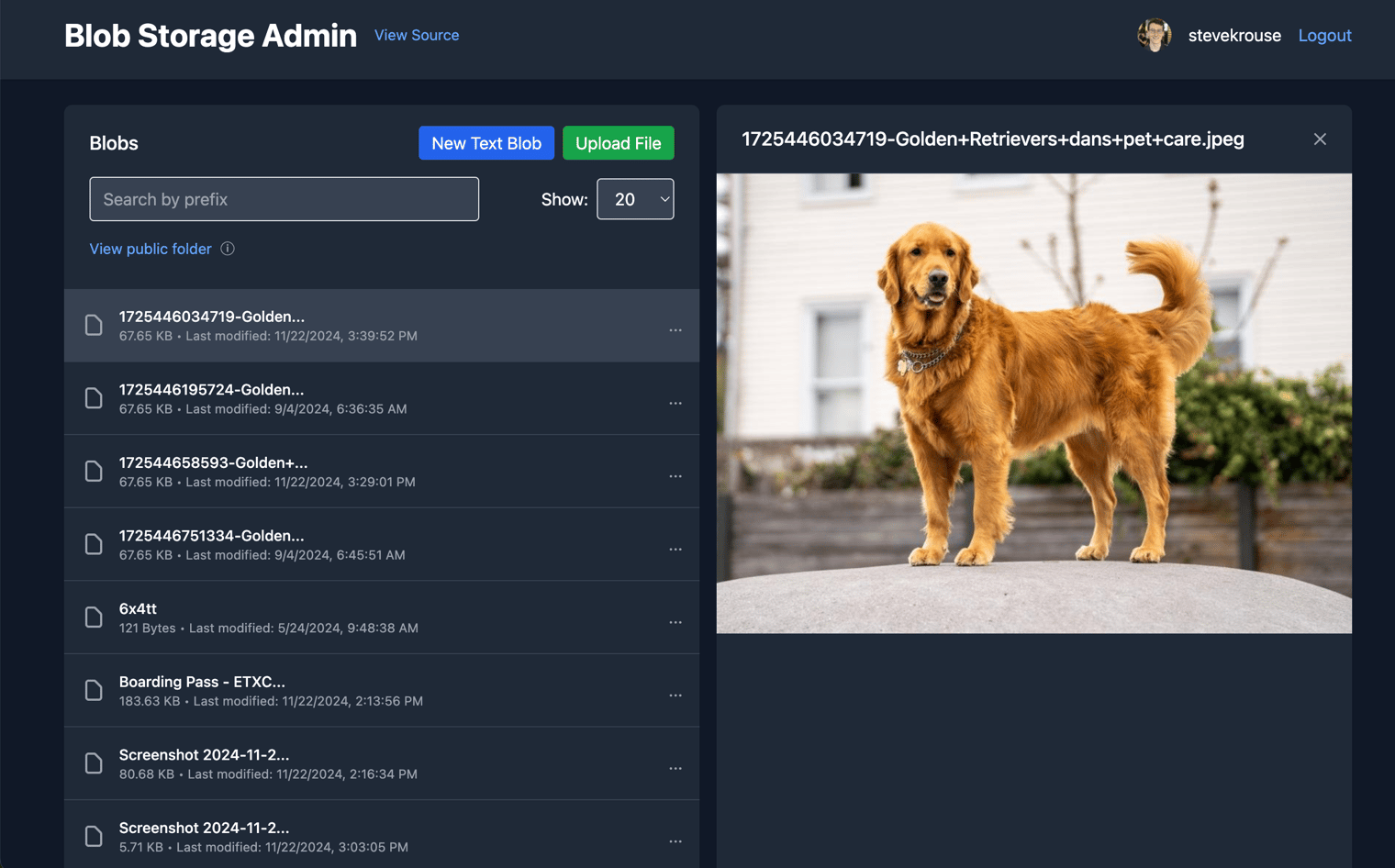

```tsx
{profile && (
  <div className="flex items-center space-x-4">
    <img src={profile.profileImageUrl} alt="Profile" className="w-8 h-8 rounded-full" />
    <span>{profile.username}</span>
    <a href="/auth/logout" className="text-blue-400 hover:text-blue-300">Logout</a>
{/* … */}
      alt="Blob content"
      className="max-w-full h-auto"
      onError={() => console.error("Error loading image")}
    />
  </div>
{/* … */}
  <li>Create public shareable links for blobs</li>
  <li>View and manage public folder</li>
  <li>Preview images directly in the interface</li>
</ul>
</div>
```

2. **Reference Scaling**: Uses known body proportions or user-provided reference measurements
3. **Anthropometric Calculations**: Applies standard body measurement formulas
4. **Real-time Processing**: Processes images and returns measurements instantly

## Usage

…

- **Backend**: Hono.js with pose detection and measurement calculations
- **Frontend**: React with image upload and results display
- **Computer Vision**: Currently uses mock pose detection (see integration guide below)
- **Calculations**: Anthropometric formulas for body measurements

…

## API Endpoints

- `POST /api/measure` - Upload an image and get body measurements
- `POST /api/report` - Download measurements as a text file
- `GET /api/health` - Health check endpoint

…

The system currently:
- ✅ Provides a complete web interface for image upload
- ✅ Validates image format and size
- ✅ Implements comprehensive measurement calculations
- ✅ Uses anthropometric formulas for accurate estimations

…

Accuracy depends on:
1. **Photo quality**: Clear, well-lit, full-body images
2. **Pose quality**: Upright stance with arms held slightly away from the body
3. **Reference measurement**: Providing the person's actual height significantly improves accuracy
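The "Reference Scaling" step with a user-provided height can be sketched as follows. It assumes landmarks are normalized the way the PoseNet code in `real-pose-integration.ts` does it (x divided by image width, y by image height). Because the two axes are normalized by different dimensions, distances have to be measured in pixels, not in raw 0-1 units. `toPixels`, `pixelDistance`, and `cmPerPixel` are hypothetical helpers, and which two landmarks bracket the person's height is an assumption: MediaPipe has no crown-of-head landmark, so a real implementation would need a correction there.

```typescript
// Landmark shape used in the pose code: normalized x/y, PoseNet sets z to 0.
interface PoseLandmark { x: number; y: number; z: number; visibility: number; }

// Convert a normalized landmark back to pixel coordinates.
function toPixels(p: PoseLandmark, width: number, height: number): [number, number] {
  return [p.x * width, p.y * height];
}

// Euclidean distance in pixels; correct even when width !== height.
function pixelDistance(a: PoseLandmark, b: PoseLandmark, width: number, height: number): number {
  const [ax, ay] = toPixels(a, width, height);
  const [bx, by] = toPixels(b, width, height);
  return Math.hypot(ax - bx, ay - by);
}

// Scale factor from the user's real height, assuming `top` and `bottom`
// span the full standing height in the image.
function cmPerPixel(
  referenceHeightCm: number,
  top: PoseLandmark,
  bottom: PoseLandmark,
  width: number,
  height: number,
): number {
  const pixelHeight = pixelDistance(top, bottom, width, height);
  if (pixelHeight <= 0) throw new Error("Degenerate height span");
  return referenceHeightCm / pixelHeight;
}
```

For a 170 cm person spanning 800 px in a 1000×1000 image, the scale is 170 / 800 = 0.2125 cm/px, so shoulders 200 px apart come out at roughly 42.5 cm.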

Demo/real-pose-integration.ts

```ts
}

async detectPose(imageData: string): Promise<PoseLandmark[] | null> {
  if (!this.initialized) {
    await this.initialize();
  }

  return new Promise((resolve) => {
    // Convert base64 to image element
    const img = new Image();
    img.onload = () => {
      // Create canvas and draw image
      const canvas = document.createElement('canvas');
      const ctx = canvas.getContext('2d');
      if (!ctx) {
        // getContext can return null; treat it as a failed detection
        resolve(null);
        return;
      }
      canvas.width = img.width;
      canvas.height = img.height;
      ctx.drawImage(img, 0, 0);

      // Set up pose detection callback
      // …
      });

      // Send image to MediaPipe
      this.pose.send({ image: canvas });
    };

    img.onerror = () => resolve(null);
    img.src = imageData;
  });
}
// …
}

async detectPose(imageData: string): Promise<PoseLandmark[] | null> {
  // Convert base64 to tensor
  const imageBuffer = Buffer.from(imageData.split(',')[1], 'base64');
  const imageTensor = tf.node.decodeImage(imageBuffer, 3);

  // Detect pose
  const pose = await this.model.estimateSinglePose(imageTensor, {
    flipHorizontal: false
  });
  // …
  if (pose.score > 0.5) {
    const landmarks = pose.keypoints.map(keypoint => ({
      x: keypoint.position.x / imageTensor.shape[1], // Normalize to 0-1
      y: keypoint.position.y / imageTensor.shape[0], // Normalize to 0-1
      z: 0, // PoseNet doesn't provide a z-coordinate
      visibility: keypoint.score
    }));
    imageTensor.dispose();

    return landmarks;
  }

  imageTensor.dispose();
  return null;
}
```

…

1. MediaPipe for web applications (client-side processing)
2. TensorFlow.js PoseNet for server-side processing
3. Combine with OpenCV for additional image preprocessing

Steps to implement:
1. Replace the mock PoseDetector with a real implementation
2. Add proper error handling for different image formats
3. Implement image preprocessing (resize, normalize, etc.)
4. Add confidence thresholds and validation
5. Consider caching models for better performance
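Step 4, "Add confidence thresholds and validation", could look like the sketch below, which rejects a pose unless every landmark needed for measurement is present and sufficiently visible. The 0.5 default mirrors the `pose.score > 0.5` check above but is applied per landmark; the value and the choice of required landmarks are assumptions. Note that the two detectors index landmarks differently: the table here uses MediaPipe's 33-point indices, while PoseNet's 17 keypoints (which the TensorFlow.js code passes through unchanged) put the left shoulder at 5, the left hip at 11, and the left ankle at 15. `validateLandmarks` and `MEDIAPIPE_REQUIRED` are hypothetical names.

```typescript
interface PoseLandmark { x: number; y: number; z: number; visibility: number; }

// MediaPipe Pose (33-landmark model) indices for the body points used in measurements.
const MEDIAPIPE_REQUIRED = {
  leftShoulder: 11,
  rightShoulder: 12,
  leftHip: 23,
  rightHip: 24,
  leftAnkle: 27,
  rightAnkle: 28,
} as const;

type ValidationResult = { ok: true } | { ok: false; missing: string[] };

// A pose passes only if every required landmark exists and clears the threshold.
function validateLandmarks(
  landmarks: PoseLandmark[],
  required: Record<string, number> = MEDIAPIPE_REQUIRED,
  minVisibility = 0.5,
): ValidationResult {
  const missing = Object.entries(required)
    .filter(([, index]) => {
      const lm = landmarks[index];
      return lm === undefined || lm.visibility < minVisibility;
    })
    .map(([name]) => name);
  return missing.length === 0 ? { ok: true } : { ok: false, missing };
}
```

Returning the names of the failing landmarks, rather than a bare boolean, lets the API explain what was not visible (for example, "leftAnkle" when the feet are cropped).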

```ts
import { PoseDetector } from "./pose-detection.ts";
import { BodyMeasurementCalculator } from "./measurements.ts";
import { validateImageData, validateReferenceHeight, calculateConfidenceScore, generateMeasurementReport } from "./utils.ts";
import type { MeasurementRequest, MeasurementResponse } from "../shared/types.ts";

// …
    apiEndpoint: '/api/measure',
    maxFileSize: 10 * 1024 * 1024, // 10MB
    supportedFormats: ['image/jpeg', 'image/png', 'image/webp']
  };
</script>`;
// …
    // Validate input
    const imageValidation = validateImageData(body.imageData);
    if (!imageValidation.valid) {
      return c.json<MeasurementResponse>({
        success: false,
        error: imageValidation.error
      }, 400);
    }
// …
    // Detect pose landmarks
    const landmarks = await poseDetector.detectPose(body.imageData);
    if (!landmarks) {
      return c.json<MeasurementResponse>({
        success: false,
        error: "Failed to detect pose in the image. Please ensure the person is clearly visible and standing upright."
      }, 400);
    }
// …
    return c.json<MeasurementResponse>({
      success: false,
      error: "An error occurred while processing the image. Please try again."
    }, 500);
  }
// …
    // Validate input
    const imageValidation = validateImageData(body.imageData);
    if (!imageValidation.valid) {
      return c.text(imageValidation.error || "Invalid image", 400);
    }

    // Detect pose and calculate measurements
    const landmarks = await poseDetector.detectPose(body.imageData);
    if (!landmarks) {
      return c.text("Failed to detect pose in the image", 400);
    }
// …
  endpoints: {
    "POST /api/measure": {
      description: "Calculate body measurements from an image",
      body: {
        imageData: "string (base64 encoded image)",
        referenceHeight: "number (optional, height in cm)",
        gender: "string (optional, 'male' or 'female')"
// …
  },
  notes: [
    "Images should be clear, full-body photos with the person standing upright",
    "Supported formats: JPEG, PNG, WebP",
    "Maximum file size: 10MB",
```
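A client for `POST /api/measure` might build its request body as sketched here. The field names and units (`imageData` as a base64 data URL, optional `referenceHeight` in cm, optional `gender` of `'male'` or `'female'`) come from the endpoint description in the server code; `buildMeasureBody`, `measure`, and the positive-number check are hypothetical. Rejecting `NaN` matters because the frontend's `parseFloat(referenceHeight)` can produce it, and `JSON.stringify` would silently turn it into `null`.

```typescript
type Gender = "male" | "female";

interface MeasureBody {
  imageData: string;        // base64 data URL, e.g. "data:image/jpeg;base64,..."
  referenceHeight?: number; // optional, in cm
  gender?: Gender;          // optional
}

function buildMeasureBody(imageData: string, referenceHeight?: number, gender?: Gender): MeasureBody {
  if (!imageData.startsWith("data:image/")) {
    throw new Error("imageData must be a data:image/... URL");
  }
  const body: MeasureBody = { imageData };
  // Only include optional fields that were actually provided and valid.
  if (referenceHeight !== undefined) {
    if (!Number.isFinite(referenceHeight) || referenceHeight <= 0) {
      throw new Error("referenceHeight must be a positive number of centimetres");
    }
    body.referenceHeight = referenceHeight;
  }
  if (gender !== undefined) body.gender = gender;
  return body;
}

// Posts to the documented endpoint; `baseUrl` is wherever the app is served.
async function measure(baseUrl: string, body: MeasureBody): Promise<unknown> {
  const res = await fetch(new URL("/api/measure", baseUrl), {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(body),
  });
  // The handlers shown reply with JSON for success and for 400/500 errors alike.
  return res.json();
}
```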

Demo/index.html

```css
  background-color: #eff6ff;
}
.preview-image {
  max-width: 100%;
  max-height: 400px;
```

```js
const App = () => {
  const [selectedImage, setSelectedImage] = useState(null);
  const [imagePreview, setImagePreview] = useState(null);
  const [measurements, setMeasurements] = useState(null);
  const [loading, setLoading] = useState(false);
  // …
  const fileInputRef = useRef(null);

  const handleImageSelect = useCallback((file) => {
    if (!file) return;

    // Validate file type
    if (!file.type.startsWith('image/')) {
      setError('Please select a valid image file');
      return;
    }

    // Validate file size (10MB)
    if (file.size > 10 * 1024 * 1024) {
      setError('Image size must be less than 10MB');
      return;
    }

    setError(null);
    setSelectedImage(file);

    // Create preview
    const reader = new FileReader();
    reader.onload = (e) => {
      setImagePreview(e.target.result);
    };
    reader.readAsDataURL(file);
  // …

  const handleFileInput = (e) => {
    const file = e.target.files[0];
    handleImageSelect(file);
  };

  // …
    e.stopPropagation();
    const file = e.dataTransfer.files[0];
    handleImageSelect(file);
  };

  // …
  const calculateMeasurements = async () => {
    if (!selectedImage) {
      setError('Please select an image first');
      return;
    }
    // …
      const reader = new FileReader();
      reader.onload = async (e) => {
        const imageData = e.target.result;
        const requestBody = {
          // …
          imageData,
          referenceHeight: referenceHeight ? parseFloat(referenceHeight) : undefined,
          gender
          // …
        }
      };
      reader.readAsDataURL(selectedImage);
    } catch (err) {
      setError('An error occurred while processing the image');
      console.error('Measurement error:', err);
    } finally {
    // …

  const downloadReport = async () => {
    if (!selectedImage) return;

    try {
      const reader = new FileReader();
      reader.onload = async (e) => {
        const imageData = e.target.result;
        const requestBody = {
          // …
          imageData,
          referenceHeight: referenceHeight ? parseFloat(referenceHeight) : undefined,
          gender
          // …
        }
      };
      reader.readAsDataURL(selectedImage);
    } catch (err) {
      setError('Failed to download report');
    // …
          // Upload area
          React.createElement('div', { className: 'bg-white rounded-lg shadow-md p-6' },
            React.createElement('h2', { className: 'text-xl font-semibold mb-4' }, 'Upload Image'),
            !imagePreview ?
              // …
              React.createElement('div', {
                className: 'upload-area',
                // …
              React.createElement('div', { className: 'text-center' },
                React.createElement('img', {
                  src: imagePreview,
                  alt: 'Preview',
                  className: 'preview-image mx-auto mb-4'
                }),
                React.createElement('button', {
                  onClick: () => fileInputRef.current?.click(),
                  className: 'px-4 py-2 bg-blue-500 text-white rounded-md hover:bg-blue-600 transition-colors'
                }, 'Change Image')
              ),
              ref: fileInputRef,
              // …
              type: 'file',
              accept: 'image/*',
              onChange: handleFileInput,
              className: 'hidden'
          // …
          React.createElement('button', {
            onClick: calculateMeasurements,
            disabled: !selectedImage || loading,
            className: `w-full px-6 py-3 bg-blue-600 text-white rounded-md font-medium hover:bg-blue-700 disabled:bg-gray-400 disabled:cursor-not-allowed transition-colors flex items-center justify-center space-x-2`
          },
          // …
            React.createElement('h2', { className: 'text-xl font-semibold text-gray-700 mb-2' }, 'No Measurements Yet'),
            React.createElement('p', { className: 'text-gray-500' },
              'Upload an image and click "Calculate Measurements" to get started'
            )
          )
          // …
            React.createElement('li', null, 'Person standing upright and straight'),
            React.createElement('li', null, 'Arms slightly away from body'),
            React.createElement('li', null, 'Good lighting and clear image'),
            React.createElement('li', null, 'Minimal background clutter')
          )
```
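In `calculateMeasurements` and `downloadReport`, `reader.readAsDataURL(...)` sits inside `try`, but the request itself runs later in `reader.onload`. As a result the `catch` never sees errors from the request, and the `finally` block (whose body is not shown) runs before the request has even started. A promise-based conversion lets the whole flow be awaited inside one `try`. The sketch below uses only `Blob.arrayBuffer()` and `btoa`, which are standard web APIs (also available in Deno and Node 18+); `blobToDataURL` and `bytesToBase64` are hypothetical helper names. A `File` is a `Blob`, so `selectedImage` can be passed directly.

```typescript
// Encode raw bytes as base64 via a binary string and the web-standard btoa.
function bytesToBase64(bytes: Uint8Array): string {
  let binary = "";
  for (let i = 0; i < bytes.length; i++) {
    binary += String.fromCharCode(bytes[i]);
  }
  return btoa(binary);
}

// Promise-based replacement for the FileReader/onload pattern: because the
// caller can await it, a surrounding try/catch/finally covers the whole request.
async function blobToDataURL(blob: Blob): Promise<string> {
  const bytes = new Uint8Array(await blob.arrayBuffer());
  return `data:${blob.type || "application/octet-stream"};base64,${bytesToBase64(bytes)}`;
}
```

Inside the handler, `const imageData = await blobToDataURL(selectedImage);` followed by the `fetch` call can then live in the same `try`, so `catch` reports request failures and `finally` runs only after the response has been handled.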

```ts
// Utility functions for image processing and validation

export function validateImageData(imageData: string): { valid: boolean; error?: string } {
  try {
    // Check that it is an image data URL (data:image/...;base64,...)
    if (!imageData.startsWith('data:image/')) {
      return { valid: false, error: 'Invalid image format. Please upload a valid image.' };
    }

    // Extract the base64 part
    const base64Data = imageData.split(',')[1];
    if (!base64Data) {
      return { valid: false, error: 'Invalid base64 image data.' };
    }

    // … (elided: sizeInBytes is computed here)
    const maxSizeInMB = 10;
    if (sizeInBytes > maxSizeInMB * 1024 * 1024) {
      return { valid: false, error: `Image too large. Maximum size is ${maxSizeInMB}MB.` };
    }

    return { valid: true };
  } catch (error) {
    return { valid: false, error: 'Failed to validate image data.' };
  }
}
```
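The lines that compute `sizeInBytes` are not shown. One standard way to get the decoded size of a base64 payload without decoding it is below: every 4 base64 characters encode 3 bytes, minus one byte per trailing `=` pad. This is an illustration, not necessarily the author's computation, and `base64DecodedSize` is a hypothetical name. Checking the raw string length instead would overstate the image size by about a third.

```typescript
// Decoded byte length of a base64 string (whitespace ignored, padding optional).
function base64DecodedSize(base64: string): number {
  const clean = base64.replace(/\s/g, "");
  const padding = clean.endsWith("==") ? 2 : clean.endsWith("=") ? 1 : 0;
  return Math.floor((clean.length * 3) / 4) - padding;
}
```

With it, the limit check reads `base64DecodedSize(base64Data) > maxSizeInMB * 1024 * 1024`.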

Demo/pose-detection.ts

```ts
}

async detectPose(imageData: string): Promise<PoseLandmark[] | null> {
  if (!this.initialized) {
    await this.initialize();
  // …
  // For demonstration, we'll use a mock pose detection
  // In production, you would:
  // 1. Decode the base64 image
  // 2. Process with MediaPipe Pose
  // 3. Return the 33 pose landmarks
  // …
  if (head.y > 0.1 || feet < 0.85) {
    issues.push('Full body should be visible in the image');
  }
```
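Step 1 of the production outline, "Decode the base64 image", amounts to splitting the data URL into its MIME type and raw bytes. The sketch uses the web-standard `atob`, available in browsers, Deno, and Node 16+; `decodeDataUrl` and `DecodedImage` are hypothetical names. The resulting bytes are what a decoder such as `tf.node.decodeImage` in `real-pose-integration.ts` expects, although that code uses Node's `Buffer` for the same job.

```typescript
interface DecodedImage {
  mimeType: string;
  bytes: Uint8Array;
}

// Parse "data:<mime>;base64,<payload>" and decode the payload to bytes.
function decodeDataUrl(dataUrl: string): DecodedImage {
  const match = /^data:([^;,]+);base64,([\s\S]*)$/.exec(dataUrl);
  if (!match) throw new Error("Expected a base64 data URL");
  const binary = atob(match[2]); // throws on malformed base64
  const bytes = new Uint8Array(binary.length);
  for (let i = 0; i < binary.length; i++) {
    bytes[i] = binary.charCodeAt(i);
  }
  return { mimeType: match[1], bytes };
}
```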