FarcasterSpaces/index.tsx

import { handleAnalyticsEndpoints } from './analytics.tsx'
import { embedMetadata, handleFarcasterEndpoints, name } from './farcaster.ts'
import { handleImageEndpoints } from './image.tsx'
import { fetchNeynarGet } from './neynar.ts'

const app = new Hono()

handleImageEndpoints(app)
handleFarcasterEndpoints(app)
handleAgoraEndpoints(app)
…
<meta name="fc:frame" content={JSON.stringify(embedMetadata(baseUrl, path))} />
<link rel="icon" href={baseUrl + '/icon?rounded=1'} />
<meta property="og:image" content={baseUrl + '/image'} />
</head>
<body class="bg-white text-black dark:bg-black dark:text-white">

FarcasterSpaces/image.tsx

import { fetchUsersById } from './neynar.ts'

export function handleImageEndpoints(app: Hono) {
const headers = {
'Content-Type': 'image/png',
'cache-control': 'public, max-age=3600', // 86400
}
app.get('/image', async (c) => {
const channel = c.req.query('channel')
if (channel) return c.body(await spaceImage(channel), 200, headers)
return c.body(await homeImage(), 200, headers)
})
app.get('/icon', async (c) => {
const rounded = !!c.req.query('rounded')
return c.body(await iconImage(rounded), 200, headers)
})
}

export async function homeImage() {
return await ogImage(
<div tw="w-full h-full flex justify-start items-end text-[100px] bg-black text-white p-[50px]">
<div tw="flex flex-col items-start">
…
}

export async function spaceImage(channel: string) {
const space = await fetchSpace(channel)
if (!space) return await homeImage()

const users = await fetchUsersById([space.created_by, ...space.hosts, ...space.speakers].join(','))
…
const speakers = users?.filter((u) => speakerFids.includes(u.fid))

return await ogImage(
<div tw="w-full h-full flex justify-start items-start text-[100px] bg-black text-white p-[50px]">
<div tw="flex flex-col items-start ">
…
}

export async function iconImage(rounded = false) {
const roundedClass = rounded ? 'rounded-[96px]' : ''
return await ogImage(
<div tw={`w-full h-full flex justify-center items-center text-[100px] bg-[#7c65c1] text-white p-[50px] ${roundedClass}`}>
<img tw="w-[350px] h-[350px]" src={base64Icon(Mic)} />
…
//////////

export async function ogImage(body, options = {}) {
const svg = await satori(body, {
width: 945,
…
if (code === 'emoji') {
const unicode = segment.codePointAt(0).toString(16).toUpperCase()
return `data:image/svg+xml;base64,` + btoa(await loadEmoji(unicode))
}
return ''
…
const base64 = Buffer.from(svg).toString('base64')
// console.log('getIconDataUrl', name, svg, base64)
return `data:image/svg+xml;base64,${base64}`
}
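In `ogImage`, the emoji branch of satori's `loadAdditionalAsset` callback reads only `segment.codePointAt(0)`, so a multi-code-point emoji (a skin-tone modifier, a flag, a ZWJ sequence) is looked up by its first code point alone. A minimal sketch of a helper that keeps every code point follows. The helper name `emojiCodePoints` is hypothetical, and it assumes the emoji source behind `loadEmoji` (not shown in the snippet) names multi-code-point assets with dash-joined uppercase hex; that may not hold, so the separator and the handling of U+FE0F are parameters.

```typescript
// Hypothetical helper (not part of the original val): converts one grapheme
// segment into uppercase hex code points joined by a separator, e.g. "1F44D-1F3FD".
// Assumes the emoji asset source accepts this naming scheme.
export function emojiCodePoints(
  segment: string,
  separator = "-",
  dropVariationSelector = false,
): string {
  const codes: string[] = [];
  // for...of walks the string by code point, so surrogate pairs stay intact.
  for (const char of segment) {
    const cp = char.codePointAt(0);
    if (cp === undefined) continue;
    // Some emoji asset sets omit U+FE0F (emoji presentation selector) from file names.
    if (dropVariationSelector && cp === 0xfe0f) continue;
    codes.push(cp.toString(16).toUpperCase());
  }
  return codes.join(separator);
}

// Inside the emoji branch this would replace segment.codePointAt(0)...:
// const unicode = emojiCodePoints(segment);
console.log(emojiCodePoints("\u{1F44D}")); // 1F44D
console.log(emojiCodePoints("\u{1F44D}\u{1F3FD}")); // 1F44D-1F3FD
```

For a single-code-point emoji the result is identical to the original expression, so the change only affects sequences.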

FarcasterSpaces/farcaster.ts

export const name = 'Spaces'
// export const iconUrl = "https://imgur.com/FgHOubn.png";
// export const ogImageUrl = "https://imgur.com/oLCWKFI.png";

export function embedMetadata(baseUrl: string, path: string = '/') {
…
return {
version: 'next',
imageUrl: baseUrl + '/image' + (channel ? '?channel=' + channel : ''),
button: {
title: name,
…
name: name,
url: baseUrl + path,
splashImageUrl: baseUrl + '/icon',
splashBackgroundColor: '#111111',
},
…
iconUrl: baseUrl + '/icon',
homeUrl: baseUrl,
splashImageUrl: baseUrl + '/icon?rounded=1',
splashBackgroundColor: '#111111',
subtitle: 'Live audio conversations',
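`embedMetadata` appends the channel to the image URL by plain string concatenation, so a channel value containing `&`, `#`, or a space would alter the query string. If channel names can contain such characters (an assumption; the snippet does not show how they are constrained), a sketch using the standard `URL` and `URLSearchParams` APIs could look like this. `embedImageUrl` is a hypothetical name.

```typescript
// Hypothetical helper: builds the same URL as the imageUrl field in
// embedMetadata, but lets URLSearchParams percent-encode the channel name.
export function embedImageUrl(baseUrl: string, channel?: string): string {
  // Strip one trailing slash so "https://host/" and "https://host" give the
  // same result (plain concatenation would produce "//image" for the former).
  const url = new URL(baseUrl.replace(/\/$/, "") + "/image");
  if (channel) url.searchParams.set("channel", channel);
  return url.toString();
}

console.log(embedImageUrl("https://spaces.example", "memes"));
// https://spaces.example/image?channel=memes
console.log(embedImageUrl("https://spaces.example", "dev & ops"));
// https://spaces.example/image?channel=dev+%26+ops
```

`URLSearchParams` serializes with form encoding, so spaces become `+`; the `/image` handler's `c.req.query('channel')` should decode either form back to the original value.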

testing-testing/index.html

<!-- reference the webpage's favicon. note: currently only svg is supported in val town files -->
<!-- <link rel="icon" href="/favicon.svg" sizes="any" type="image/svg+xml"> -->

<!-- import the webpage's javascript file -->

const VISUAL_ANALYSIS_PROMPT = `
You are a master interior architect. Analyze the provided image of an empty room.
Your analysis must be concise and structured. Identify the room's key architectural features, light sources, flooring, and overall dimensions.
This information will be used by another AI to create a staging plan.
…
const STAGING_STRATEGY_PROMPT = `
You are an expert home staging strategist. You will receive an architectural analysis of a room, a desired design style, and the room's designated function.
Your task is to generate a comprehensive staging plan that will be used to create a photorealistic, virtually staged image. You must produce two outputs:
1. A detailed, descriptive prompt for an image generation AI (DALL-E) to create the final image. This prompt must incorporate the original room's features to ensure architectural fidelity and describe the new furniture, layout, decor, and lighting in vivid detail to create a compelling, market-ready scene.
2. A "Design Rationale" explaining to the client *why* this staging is effective for attracting buyers.

…
font-family: 'Poppins', sans-serif;
appearance: none;
background-image: url("data:image/svg+xml,%3csvg xmlns='http://www.w3.org/2000/svg' viewBox='0 0 16 16'%3e%3cpath fill='none' stroke='%23343a40' stroke-linecap='round' stroke-linejoin='round' stroke-width='2' d='M2 5l6 6 6-6'/%3e%3c/svg%3e");
background-repeat: no-repeat;
background-position: right 0.75rem center;
…
#file-upload-label span { font-size: 1rem; color: var(--text-secondary); }
#file-upload-label strong { color: var(--accent-color); }
#image-preview-container {
width: 100%;
aspect-ratio: 4 / 3;
…
background-color: var(--bg-main);
}
#image-preview {
width: 100%;
height: 100%;
…
@keyframes spin { to { transform: rotate(360deg); } }
.staged-result { width: 100%; }
.image-comparison {
display: grid;
grid-template-columns: 1fr 1fr;
…
width: 100%;
}
.image-box { border-radius: 8px; overflow: hidden; border: 1px solid var(--border-color); }
.image-box img { width: 100%; display: block; }
.image-box h4 { text-align: center; padding: 0.75rem; background: var(--bg-main); }
.design-rationale {
width: 100%;
…
<div class="form-group">
<label for="file-upload" id="file-upload-label">
<span id="upload-text"><strong>Click to upload</strong> or drag & drop an image</span>
<div id="image-preview-container" style="display:none;">
<img id="image-preview" src="" alt="Room preview">
</div>
</label>
<input type="file" id="file-upload" accept="image/*" style="display:none;" required>
</div>
<div class="form-group">
…
const stagedResultContainer = $('#staged-result');
const fileInput = $('#file-upload');
const imagePreview = $('#image-preview');
const imagePreviewContainer = $('#image-preview-container');
const uploadText = $('#upload-text');

let originalImageBase64 = null;
let originalImageSrc = null;

async function handleApiRequest(payload) {
…
function renderResults(data) {
stagedResultContainer.innerHTML = \`
<div class="image-comparison">
<div class="image-box">
<h4>Before</h4>
<img src="\${originalImageSrc}" alt="Original empty room">
</div>
<div class="image-box">
<h4>After</h4>
<img src="\${data.staged_image_url}" alt="AI Staged Room">
</div>
</div>
…
form.addEventListener('submit', (e) => {
e.preventDefault();
if (!originalImageBase64) {
alert('Please upload an image of the room first.');
return;
}
const payload = {
image: originalImageBase64,
room_type: $('#room-type').value,
style: $('#style-type').value,
…
const reader = new FileReader();
reader.onload = (event) => {
originalImageSrc = event.target.result;
imagePreview.src = originalImageSrc;
imagePreviewContainer.style.display = 'block';
uploadText.style.display = 'none';
// Store just the base64 part for the API call
originalImageBase64 = originalImageSrc.split(',')[1];
};
reader.readAsDataURL(file);
…
throw new Error("Invalid action specified.");
}
if (!body.image) {
throw new Error("Image data is missing from the request.");
}

// STEP 1: Analyze the image with GPT-4o Vision to understand its architecture.
const analysisCompletion = await openai.chat.completions.create({
model: "gpt-4o",
…
content: [
{ type: "text", text: "Analyze the architectural features of this empty room." },
{ type: "image_url", image_url: { url: `data:image/jpeg;base64,${body.image}` } },
],
},
…
const { dalle_prompt, design_rationale } = strategyResult;

// STEP 3 (CORRECTED): Generate the final, staged image using the openai.createImage method,
// which is appropriate for the older SDK version wrapped by std/openai?v=4.
const imageResponse = await openai.createImage({
prompt: dalle_prompt,
n: 1,
…
});

const stagedImageUrl = imageResponse.data[0].url;

// Return the complete package to the frontend.
return new Response(
JSON.stringify({
staged_image_url: stagedImageUrl,
design_rationale: design_rationale,
}),
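The upload handler keeps only the part after the comma (`originalImageSrc.split(',')[1]`), and the backend then rebuilds the URL as `data:image/jpeg;base64,...` regardless of what was uploaded, so a PNG or WebP is labeled as JPEG. A minimal sketch that carries the MIME type through instead; `parseDataUrl`, `toDataUrl`, and `ParsedDataUrl` are hypothetical names, not part of the original app.

```typescript
// Hypothetical helpers: keep the MIME type from the FileReader data URL
// instead of discarding it and assuming JPEG on the server.
export interface ParsedDataUrl {
  mimeType: string;
  base64: string;
}

export function parseDataUrl(dataUrl: string): ParsedDataUrl {
  // Matches "data:<mime>;base64,<payload>"; the s flag lets "." span newlines.
  const match = /^data:([^;,]+);base64,(.*)$/s.exec(dataUrl);
  if (!match) throw new Error("Expected a base64-encoded data URL");
  return { mimeType: match[1], base64: match[2] };
}

export function toDataUrl({ mimeType, base64 }: ParsedDataUrl): string {
  return `data:${mimeType};base64,${base64}`;
}

const parsed = parseDataUrl("data:image/png;base64,iVBORw0KGgo=");
console.log(parsed.mimeType); // image/png
console.log(toDataUrl(parsed)); // data:image/png;base64,iVBORw0KGgo=
```

On the frontend, `reader.onload` could send `parsed.mimeType` alongside `parsed.base64` (for example in a hypothetical `image_type` field of the payload), and the backend could build the `image_url` with `toDataUrl` rather than the hard-coded JPEG prefix.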

stevensDemo/README.md

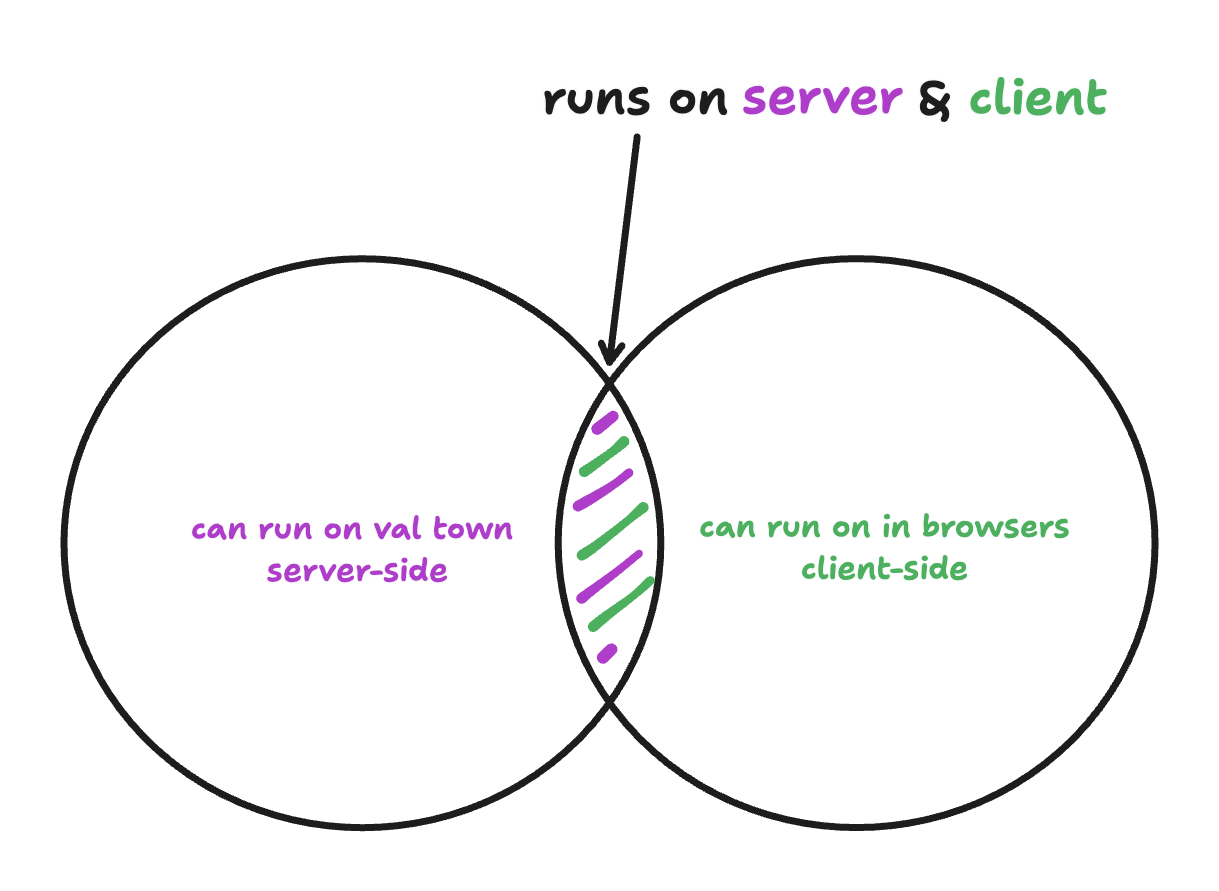

It's common to have code and types that are needed on both the frontend and the backend. It's important to write this code defensively, because it can only use what both environments support.

For example, you *cannot* use the `Deno` global. For imports, you can't use `npm:` specifiers, so we recommend `https://esm.sh` because it works on both the server and the client. You *can* use TypeScript, because `/backend/index.ts` transpiles it for the frontend. Most code that works on the frontend also works in Deno, because Deno is designed to support web standards, but there are edge cases to look out for.
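A minimal sketch of what such a shared module might look like, assuming a `shared/` file that both sides import; the file name, `ImageRef`, and `imageRef` are hypothetical. It builds URLs for the backend's `/api/images/:filename` route using only web-standard globals, so it needs neither the `Deno` global nor an `npm:` import.

```typescript
// shared/imageRef.ts (hypothetical file name): relies only on web-standard
// globals (URL, encodeURIComponent), with no `Deno` global and no `npm:` imports,
// so the same module can run in the Deno backend and in the browser.
export type ImageRef = {
  filename: string;
  url: string;
};

export function imageRef(filename: string, origin: string): ImageRef {
  // encodeURIComponent keeps spaces and "/" in a filename from breaking the path.
  const url = new URL(`/api/images/${encodeURIComponent(filename)}`, origin);
  return { filename, url: url.toString() };
}

console.log(imageRef("cat photo.png", "https://example.com").url);
// https://example.com/api/images/cat%20photo.png
```

On the frontend, `origin` would typically be `location.origin`; on the backend it could come from the incoming request URL.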

stevensDemo/README.md

## `favicon.svg`

As of this writing, Val Town only supports text files, which is why the favicon is an SVG and not an .ico or any other binary image format. If you need binary file storage, check out [Blob Storage](https://docs.val.town/std/blob/).

## `components/`

stevensDemo/index.ts

});

// --- Blob Image Serving Routes ---

// GET /api/images/:filename - Serve images from blob storage
app.get("/api/images/:filename", async (c) => {
const filename = c.req.param("filename");

try {
// Get image data from blob storage
const imageData = await blob.get(filename);

if (!imageData) {
return c.json({ error: "Image not found" }, 404);
}

…
let contentType = "application/octet-stream"; // Default
if (filename.endsWith(".jpg") || filename.endsWith(".jpeg")) {
contentType = "image/jpeg";
} else if (filename.endsWith(".png")) {
contentType = "image/png";
} else if (filename.endsWith(".gif")) {
contentType = "image/gif";
} else if (filename.endsWith(".svg")) {
contentType = "image/svg+xml";
}

// Return the image with appropriate headers
return new Response(imageData, {
headers: {
"Content-Type": contentType,
…
});
} catch (error) {
console.error(`Error serving image ${filename}:`, error);
return c.json(
{ error: "Failed to load image", details: error.message },
500,
);
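The route picks the `Content-Type` with an if/else chain on `filename.endsWith(...)`, which is case-sensitive, so `PHOTO.JPG` falls through to `application/octet-stream`. A minimal sketch of a table-driven alternative, assuming case-insensitive matching is wanted (the original does not say); `contentTypeFor` and `CONTENT_TYPES` are hypothetical names.

```typescript
// Hypothetical refactor of the if/else chain in the /api/images/:filename route.
// A Map avoids accidental hits on Object.prototype keys such as "constructor".
const CONTENT_TYPES = new Map<string, string>([
  ["jpg", "image/jpeg"],
  ["jpeg", "image/jpeg"],
  ["png", "image/png"],
  ["gif", "image/gif"],
  ["svg", "image/svg+xml"],
]);

const DEFAULT_CONTENT_TYPE = "application/octet-stream";

export function contentTypeFor(filename: string): string {
  const dot = filename.lastIndexOf(".");
  if (dot === -1) return DEFAULT_CONTENT_TYPE;
  // Lowercase the extension so "PHOTO.JPG" maps like "photo.jpg"
  // (a behavior change: the original chain is case-sensitive).
  const ext = filename.slice(dot + 1).toLowerCase();
  return CONTENT_TYPES.get(ext) ?? DEFAULT_CONTENT_TYPE;
}

console.log(contentTypeFor("PHOTO.JPG")); // image/jpeg
console.log(contentTypeFor("notes.txt")); // application/octet-stream
```

In the route, the whole chain would collapse to `const contentType = contentTypeFor(filename);`, and adding a format becomes a one-line table entry.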

stevensDemo/index.html

href="/public/favicon.svg"
sizes="any"
type="image/svg+xml"
/>
<link rel="preconnect" href="https://fonts.googleapis.com" />
…
height: 100%;
font-family: "Pixelify Sans", sans-serif;
image-rendering: pixelated;
}
body::before {
…
/* For pixel art aesthetic */
* {
image-rendering: pixelated;
}
</style>

stevensDemo/handleUSPSEmail.ts

}

type ImageSummary = {
sender: string;
recipient: (typeof RECIPIENTS)[number] | "both" | "other";
…
anthropic: Anthropic,
htmlContent: string,
imageSummaries: ImageSummary[]
) {
try {
…
text: `Analyze the following content from an email and provide a response as a JSON blob (only JSON, no other text) with two parts.

The email is from the USPS showing mail I'm receiving. Metadata about packages is stored directly in the email. Info about mail pieces is in images, so I've included summaries of those as well.

Your response should include:
…
And here is info about the mail pieces:

${JSON.stringify(imageSummaries)}`,
},
],
…
const anthropic = new Anthropic({ apiKey });

// Process each image attachment serially
const summaries = [];
for (const [index, attachment] of e.attachments.entries()) {
try {
const imageData = await attachment.arrayBuffer();
const base64Image = btoa(
String.fromCharCode(...new Uint8Array(imageData))
);

…
content: [
{
type: "image",
source: {
type: "base64",
media_type: attachment.type,
data: base64Image,
},
},
…
summaries.push(parsedResponse);
} catch (error) {
console.error(`Image analysis error:`, error);
summaries.push({
sender: "Error",
recipient: "Error",
type: "error",
notes: `Image ${index + 1} Analysis Failed: ${error.message}`,
});
}
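The attachment loop converts each image with `btoa(String.fromCharCode(...new Uint8Array(imageData)))`. Spreading the whole array passes one argument per byte, and JavaScript engines cap how many arguments a call can take, so a large attachment can fail with a `RangeError` (the exact threshold depends on the engine); the surrounding `catch` would then record it as "Analysis Failed". A minimal sketch of a chunked alternative; `arrayBufferToBase64` and the 32 KiB chunk size are illustrative choices, not from the original.

```typescript
// Encodes bytes as base64 by building the binary string in fixed-size chunks,
// so String.fromCharCode never receives more arguments than one chunk holds.
export function arrayBufferToBase64(buffer: ArrayBuffer, chunkSize = 0x8000): string {
  const bytes = new Uint8Array(buffer);
  let binary = "";
  for (let i = 0; i < bytes.length; i += chunkSize) {
    // subarray returns a view over the same memory, not a copy.
    binary += String.fromCharCode(...bytes.subarray(i, i + chunkSize));
  }
  return btoa(binary);
}

// In the attachment loop, this would replace the btoa(String.fromCharCode(...)) call:
// const base64Image = arrayBufferToBase64(await attachment.arrayBuffer());
console.log(arrayBufferToBase64(new Uint8Array([104, 101, 108, 108, 111]).buffer)); // aGVsbG8=
```

The output is byte-for-byte the same as the original expression for inputs small enough not to throw; only the peak argument count changes.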