untitled-8620 / main.ts (1 match)

```html
<meta name="viewport" content="width=device-width, initial-scale=1.0">
<title>Prerequisite Tree Generator</title>
<link rel="icon" href="data:image/svg+xml,<svg xmlns=%22http://www.w3.org/2000/svg%22 viewBox=%220 0 100 100%22><text y=%22.9em%22 font-size=%2290%22>🌳</text></svg>">
<script src="https://unpkg.com/react@18/umd/react.development.js"></script>
<script src="https://unpkg.com/react-dom@18/umd/react-dom.development.js"></script>
```

untitled-8264 / main.ts (2 matches)

```ts
gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_WRAP_S, gl.CLAMP_TO_EDGE);
gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_WRAP_T, gl.CLAMP_TO_EDGE);
// Single-channel R8 texture, one byte per bar; gl.R8 and gl.RED require a WebGL2 context.
gl.texImage2D(gl.TEXTURE_2D, 0, gl.R8, numBars, 1, 0, gl.RED, gl.UNSIGNED_BYTE, null);

// Matrix helpers
// …
  gl.activeTexture(gl.TEXTURE0);
  gl.bindTexture(gl.TEXTURE_2D, audioTex);
  gl.texSubImage2D(gl.TEXTURE_2D, 0, 0, 0, numBars, 1, gl.RED, gl.UNSIGNED_BYTE, fftBuffer);
}
```
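The upload writes one byte per bar into a `numBars × 1` texture, so `fftBuffer` has to hold exactly `numBars` bytes. The snippet does not show how `fftBuffer` is filled. A minimal sketch, assuming the raw spectrum comes from something like `AnalyserNode.getByteFrequencyData` and usually has more bins than there are bars, averages neighbouring bins into bars; `binToBars` is a hypothetical helper, not part of the original val.

```typescript
/**
 * Hypothetical helper: average a raw byte spectrum into `numBars` buckets so the
 * result can be passed as the last argument of
 * texSubImage2D(gl.TEXTURE_2D, 0, 0, 0, numBars, 1, gl.RED, gl.UNSIGNED_BYTE, bars).
 */
function binToBars(spectrum: Uint8Array, numBars: number): Uint8Array {
  const bars = new Uint8Array(Math.max(0, numBars));
  if (spectrum.length === 0) return bars;

  for (let bar = 0; bar < bars.length; bar++) {
    // Half-open range [start, end) of source bins mapped onto this bar.
    // end is at least start + 1, so every bar reads one bin even when
    // there are more bars than bins.
    const start = Math.floor((bar * spectrum.length) / bars.length);
    const end = Math.max(start + 1, Math.floor(((bar + 1) * spectrum.length) / bars.length));

    let sum = 0;
    for (let i = start; i < end; i++) sum += spectrum[i];

    // The mean of byte values stays within 0..255, matching UNSIGNED_BYTE.
    bars[bar] = Math.round(sum / (end - start));
  }
  return bars;
}
```

Averaging keeps the texture size fixed regardless of the analyser's FFT size; taking the per-bucket maximum instead would make short peaks more visible, at the cost of a noisier display.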

```markdown
#### **Content Rendering**
- **Rich Notion Blocks**: Supports headings, paragraphs, lists, code blocks, callouts, images, videos, tables, and more
- **Enhanced Code Blocks**: Syntax highlighting powered by Prism.js with support for 30+ languages, line numbers, and language indicators (optimized for performance with non-blocking JavaScript loading)
- **Property Display**: Shows all page properties with type-specific formatting and icons
```

blob_admin / main.tsx (3 matches)

```tsx
import { Home } from "./Home.tsx";
import { BlobPage } from "./BlobPage.tsx";
import { isImage, isJSON, isText } from "./utils.ts";
import { Layout } from "./Layout.tsx";
import { NewPage } from "./NewPage.tsx";
// …
  }
  const metadata = matches[0];
  if (isImage(metadata.key)) {
    return c.html(<BlobPage name={key} metadata={metadata} type="image" />);
  }
  if (metadata.size < 1024 || isText(metadata.key)) { // is text or small
```
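The handler picks a view in a fixed order: an image extension wins first, then any blob that is either small (under 1024 bytes) or has a text extension is shown as text; the remaining branches are cut off in the snippet. A sketch of that ordering as a pure, unit-testable function follows. `chooseView`, the `"other"` fallback, and both extension sets are hypothetical stand-ins for the real `isImage`/`isText` helpers in `utils.ts`.

```typescript
type View = "image" | "text" | "other";

// Hypothetical stand-ins for the utils.ts lists; the real ones may differ.
const IMAGE_EXTS = new Set(["jpg", "jpeg", "png", "gif", "webp"]);
const TEXT_EXTS = new Set(["txt", "md", "json", "csv"]);

// Lower-cased text after the last dot, or "" if there is no dot.
function extOf(key: string): string {
  const dot = key.lastIndexOf(".");
  return dot === -1 ? "" : key.slice(dot + 1).toLowerCase();
}

// Mirrors the visible branch order: the image check runs before the size
// check, so a 200-byte PNG is still rendered as an image, not as text.
function chooseView(key: string, size: number): View {
  if (IMAGE_EXTS.has(extOf(key))) return "image";
  if (size < 1024 || TEXT_EXTS.has(extOf(key))) return "text";
  return "other"; // assumption: the elided code handles everything else
}
```

Keeping the decision separate from `c.html(...)` makes the ordering easy to test without spinning up the Hono app.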

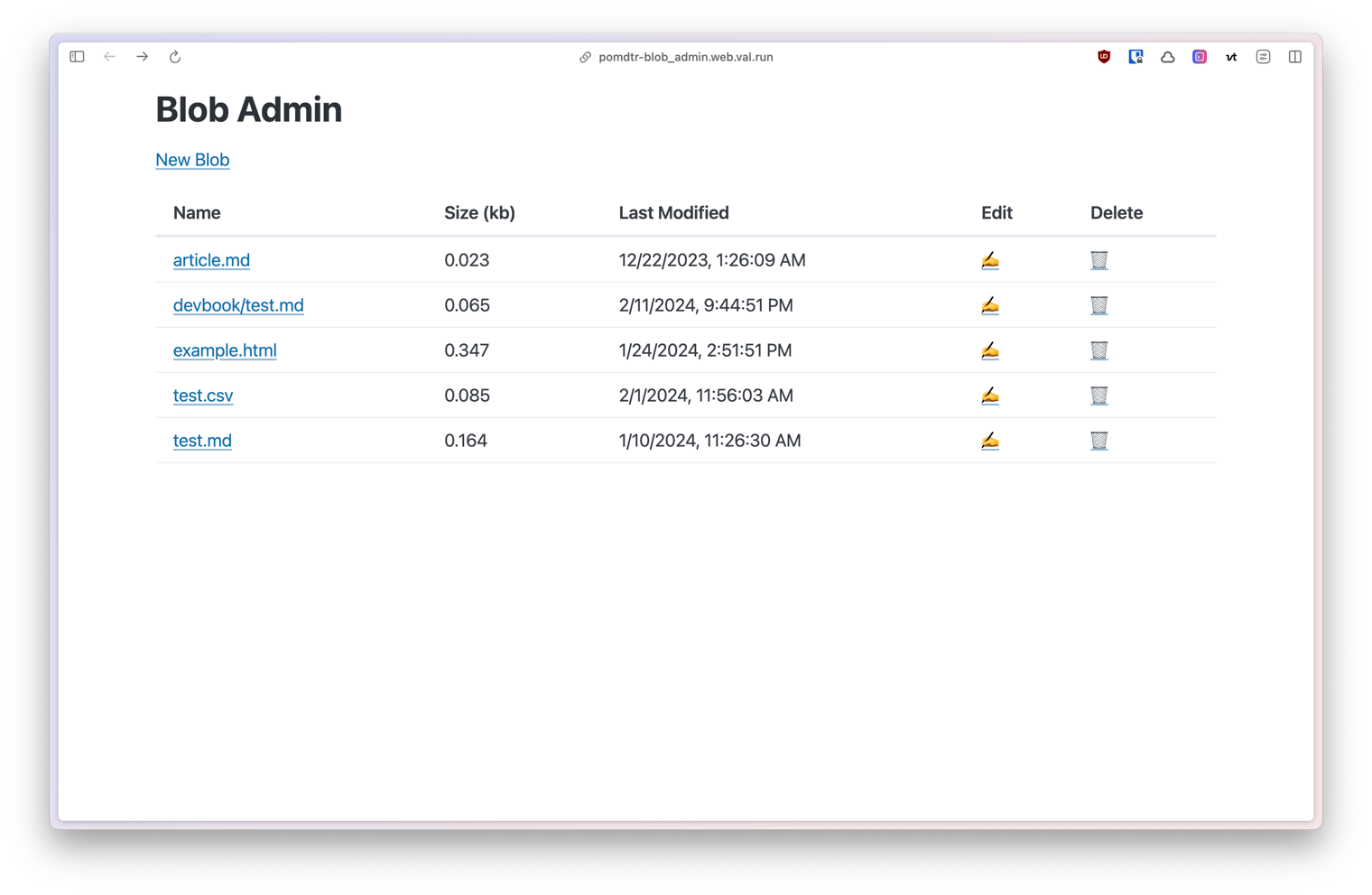

blob_admin / README.md (2 matches)

```markdown
This is a lightweight Blob Admin interface to view and debug your Blob data.

## Installation
…
- **Authentication**: Secure access with lastlogin middleware
- **File Type Support**:
  - **Images**: Display inline with preview
  - **Text Files**: Editable in textarea with syntax highlighting
  - **JSON Files**: Interactive JSON viewer with collapsible nodes and edit capability
```
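Because JSON blobs are editable, a useful safeguard (an assumption; the snippets do not show how saving works) is to parse the edited text before writing it back, so a stray comma cannot replace valid JSON with a broken blob. `validateJsonEdit` and `EditResult` are hypothetical names used only for this sketch.

```typescript
type EditResult =
  | { ok: true; normalized: string }
  | { ok: false; error: string };

// Hypothetical pre-save check for the JSON editor: reject text that does not
// parse, and re-serialize accepted text with 2-space indentation.
function validateJsonEdit(text: string): EditResult {
  try {
    const value: unknown = JSON.parse(text);
    return { ok: true, normalized: JSON.stringify(value, null, 2) };
  } catch (err) {
    // JSON.parse throws a SyntaxError describing the first invalid token.
    return { ok: false, error: err instanceof Error ? err.message : String(err) };
  }
}
```

On `ok: false`, the editor can keep the textarea contents and show `error` instead of saving.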

blob_admin / utils.ts (3 matches)

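```ts
const COMMON_IMAGE_EXTENSIONS = [
  "jpg",
  "jpeg",
  // …
];
// …
export function isImage(path: string) {
  // pop() is typed string | undefined; fall back to "" so includes() type-checks under strict mode.
  return COMMON_IMAGE_EXTENSIONS.includes(path.split(".").pop() ?? "");
}
```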
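The `split(".").pop()` approach compares case-sensitively (`PHOTO.JPG` is not matched) and can read a dot from a directory name (`albums.v2/cover` yields `v2/cover`). A stricter sketch, assuming blob keys use `/` as a separator, looks only at the last path segment and lower-cases the result. `getExtension` and the extension list here are hypothetical.

```typescript
// Hypothetical helper: lower-cased extension of the last path segment, or ""
// when there is none. Dotfiles such as ".env" are treated as having no extension.
function getExtension(path: string): string {
  const base = path.slice(path.lastIndexOf("/") + 1);
  const dot = base.lastIndexOf(".");
  return dot > 0 ? base.slice(dot + 1).toLowerCase() : "";
}

// Assumed list; the real COMMON_IMAGE_EXTENSIONS is partly elided above.
const IMAGE_EXTENSIONS = ["jpg", "jpeg", "png", "gif", "webp"];

function isImage(path: string): boolean {
  return IMAGE_EXTENSIONS.includes(getExtension(path));
}
```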

blob_admin / BlobPage.tsx (1 match)

```tsx
      </form>
    )}
    {type === "image" && <img src={`/raw/${encodeURIComponent(name)}`} />}
  </Layout>
);
```
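The `<img>` source is `/raw/` plus the blob key passed through `encodeURIComponent`, which escapes `/`, spaces, and `#` so a key such as `photos/cat #1.png` stays a single path segment. The sketch below isolates that expression; `rawUrl` is a hypothetical name, and the `/raw/:key` route is assumed to decode the segment back to the original key.

```typescript
// Hypothetical helper mirroring the src expression in BlobPage.tsx.
function rawUrl(key: string): string {
  return `/raw/${encodeURIComponent(key)}`;
}

const key = "photos/cat #1.png";
console.log(rawUrl(key)); // "/raw/photos%2Fcat%20%231.png"
// encodeURI would be the wrong choice: it leaves "/" and "#" untouched,
// so "#1.png" would turn into a URL fragment and never reach the server.
console.log(encodeURI(key)); // "photos/cat%20#1.png"
```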

```html
<br/>
<br/>
<div class="time-bar" onclick="loadImage()" id="timeBar">Loading...</div>
<div>
  <img id="preview" src="" />
</div><input type="file" id="fileInput" accept="image/*" style="display:none;">

<div id="barcodeWrapper">
<!-- … -->
window.counter = 0;
function loadImage(){
  window.counter++;
  if(window.counter > 6 ){
```
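The page caps `loadImage()` with a global `window.counter`, and the snippet stops right after the `> 6` check, so what happens once the limit is reached is not shown. Assuming the intent is "run this action at most N times", the sketch keeps the count in a closure instead of on `window`; `limitCalls` is a hypothetical helper.

```typescript
// Hypothetical: wrap an action so it runs at most `limit` times; later calls
// return undefined instead of invoking it. The count lives in the closure,
// so it cannot collide with other scripts that also touch window.counter.
function limitCalls<A extends unknown[], R>(
  limit: number,
  action: (...args: A) => R,
): (...args: A) => R | undefined {
  let calls = 0;
  return (...args: A) => {
    calls++;
    if (calls > limit) return undefined;
    return action(...args);
  };
}
```

With the same threshold as the snippet, `const guardedLoad = limitCalls(6, loadImage)` would let the first six clicks through and ignore the rest.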

NowPlayingGrabber / main.tsx (8 matches)

```tsx
    // >= so that 400 itself is treated as an error; 204 means nothing is playing.
    if (response.status >= 400) {
      const shortenedName = "Error (Forbidden)";
      const image = "/assets/spotify.svg";
      return { shortenedName, image };
    } else if (response.status === 204) {
      const shortenedName = "Currently Not Playing";
      const image = "/assets/spotify.svg";
      return { shortenedName, image };
    }

    const song = await response.json();
    const image = song.item.album.images[0].url;
    const artistNames = song.item.artists.map((a: { name: string }) => a.name);
    const link = song.item.external_urls.spotify;
    // …
      formattedArtist,
      artistLink,
      image,
    };
  } catch (error) {
    const shortenedName = "Error";
    const image = "/assets/spotify.svg";
    return { shortenedName, image };
  }
};
```
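The handler short-circuits on error statuses and on `204 No Content`, which Spotify's currently-playing endpoint returns when nothing is playing, before it parses the JSON body. A sketch of the same status handling as a pure function, with optional chaining so a missing `item`, album art, or link falls back to the placeholder instead of throwing. `SpotifyPayload` is an assumed minimal subset of the real response, the placeholder labels are simplified, and `parseNowPlaying` is hypothetical.

```typescript
// Minimal, assumed subset of the currently-playing payload read by the snippet.
interface SpotifyPayload {
  item?: {
    name?: string;
    album?: { images?: { url: string }[] };
    artists?: { name: string }[];
    external_urls?: { spotify?: string };
  } | null;
}

interface NowPlaying {
  shortenedName: string;
  image: string;
  artists?: string[];
  link?: string;
}

const FALLBACK_IMAGE = "/assets/spotify.svg";

// Hypothetical pure version of the status handling.
function parseNowPlaying(status: number, body?: SpotifyPayload): NowPlaying {
  if (status >= 400) return { shortenedName: "Error", image: FALLBACK_IMAGE };

  const item = body?.item;
  if (status === 204 || !item) {
    return { shortenedName: "Currently Not Playing", image: FALLBACK_IMAGE };
  }

  return {
    shortenedName: item.name ?? "Unknown track",
    image: item.album?.images?.[0]?.url ?? FALLBACK_IMAGE,
    artists: (item.artists ?? []).map((a) => a.name),
    link: item.external_urls?.spotify,
  };
}
```

Separating the parsing from `fetch` lets the placeholder cases be tested without a Spotify token.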

GLM-4-5-Omni / main.tsx (27 matches)

```tsx
const INPUT_TOKEN_COST = 500;
const OUTPUT_TOKEN_COST = 500;
const INPUT_IMAGE_COST = 750;
const INPUT_VIDEO_COST = 1000;
const INPUT_FILE_COST = 750;
// …
]);

const SUPPORTED_IMAGE_EXTENSIONS = new Set([
  ".jpg",
  ".jpeg",
  // …
interface ProcessedFile {
  name: string;
  type: "text" | "image" | "video" | "document" | "unknown";
  content: string;
  metadata: {
  // …
    if (SUPPORTED_TEXT_EXTENSIONS.has(extension)) {
      return this.processTextFile(buffer, name, metadata);
    } else if (SUPPORTED_IMAGE_EXTENSIONS.has(extension)) {
      return this.processImageFile(buffer, name, metadata);
    } else if (SUPPORTED_VIDEO_EXTENSIONS.has(extension)) {
      return this.processVideoFile(buffer, name, metadata);
  // …
  }

  static processImageFile(
    buffer: Uint8Array,
    name: string,
    // …
    return {
      name,
      type: "image",
      content:
        `Image File: ${name}\nFormat: ${metadata.extension.toUpperCase()}\nSize: ${metadata.size} bytes\nBase64 Data Available: Yes`,
      metadata: { ...metadata, base64 },
    };
// …
const responses = {
  "which model are you":
    "I'm an omni-capable AI assistant that can process text, images, videos, and documents using various state-of-the-art language models.",
  "which version are you":
    "I'm an omni-capable AI assistant powered by multiple advanced language models including DeepSeek, Llama-4, and others.",
  // …
    "I'm functioning optimally and ready to help with your questions and file processing needs.",
  "what can you do":
    "I can analyze text, images, videos, and documents. I can also engage in conversations, answer questions, write code, and help with various tasks.",
};

// …
      attachmentContext += `\n\n--- ${processed.name} ---\n${processed.content}`;

      if (processed.type === "image" && processed.metadata.base64) {
        attachmentContext += `\n[Base64 image data available for vision models]`;
      }
    }
// …
    for (const attachment of attachments) {
      const extension = FileProcessor.getExtension(attachment.name);
      if (SUPPORTED_IMAGE_EXTENSIONS.has(`.${extension}`)) {
        try {
          const response = await fetch(attachment.url);
          // …

          // OpenAI-compatible vision content part: { type, image_url: { url } }.
          messageContent.push({
            type: "image_url",
            image_url: {
              url: `data:${attachment.content_type};base64,${base64}`,
            },
          });
        } catch (error) {
          console.error(`Failed to process image ${attachment.name}:`, error);
        }
      }
// …
  attachments: FileAttachment[] = [],
): Promise<Response> {
  const hasImages = attachments.some((att) =>
    SUPPORTED_IMAGE_EXTENSIONS.has(`.${FileProcessor.getExtension(att.name)}`)
  );

  let finalMessages = messages;

  if (hasImages && config.supportsVision && messages.length > 0) {
    const lastMessage = messages[messages.length - 1];
    if (lastMessage.role === "user") {
// …
        for (const attachment of msg.attachments) {
          const ext = `.${FileProcessor.getExtension(attachment.name)}`;
          if (SUPPORTED_IMAGE_EXTENSIONS.has(ext)) {
            inputCost += INPUT_IMAGE_COST;
          } else if (SUPPORTED_VIDEO_EXTENSIONS.has(ext)) {
            inputCost += INPUT_VIDEO_COST;
// …
        send("text", {
          text:
            "Hi! I'm an omni-capable AI assistant. I can help you with text, images, videos, and documents.",
        });
        send("done", {});
// …
      `| Input Text | ${INPUT_TOKEN_COST} points/1k tokens |\n` +
      `| Output Text | ${OUTPUT_TOKEN_COST} points/1k tokens |\n` +
      `| Input Image | ${INPUT_IMAGE_COST} points/file |\n` +
      `| Input Video | ${INPUT_VIDEO_COST} points/file |\n` +
      `| Input Document | ${INPUT_DOCUMENT_COST} points/file |\n` +
```
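This file routes attachments by extension in three places: `processFile`, the vision message builder, and the cost calculation. The sketch below condenses that routing. It uses the point values from the constants at the top of the file, charges documents at `INPUT_FILE_COST` (an assumption, since `INPUT_DOCUMENT_COST` is defined outside the snippet), and builds an OpenAI-style `image_url` content part from raw bytes as a base64 data URL. The shortened extension sets, `classify`, `attachmentCost`, `toBase64`, and `toImagePart` are all hypothetical.

```typescript
const INPUT_TOKEN_COST = 500; // points per 1k input tokens
const INPUT_IMAGE_COST = 750; // points per image file
const INPUT_VIDEO_COST = 1000; // points per video file
const INPUT_FILE_COST = 750; // assumed to apply to all other documents

// Assumed, shortened extension lists; the real Sets in main.tsx are longer.
const IMAGE_EXTS = new Set([".jpg", ".jpeg", ".png", ".webp"]);
const VIDEO_EXTS = new Set([".mp4", ".webm"]);

type Kind = "image" | "video" | "document";

function classify(name: string): Kind {
  const dot = name.lastIndexOf(".");
  const ext = dot === -1 ? "" : name.slice(dot).toLowerCase(); // keeps the leading "."
  if (IMAGE_EXTS.has(ext)) return "image";
  if (VIDEO_EXTS.has(ext)) return "video";
  return "document";
}

// Per-1k-token charge for the prompt text plus a flat charge per attached file.
function attachmentCost(names: string[], inputTokens: number): number {
  let cost = (inputTokens / 1000) * INPUT_TOKEN_COST;
  for (const name of names) {
    const kind = classify(name);
    cost += kind === "image" ? INPUT_IMAGE_COST
      : kind === "video" ? INPUT_VIDEO_COST
      : INPUT_FILE_COST;
  }
  return cost;
}

// Build the binary string in chunks so String.fromCharCode never receives
// too many arguments at once (spreading a multi-megabyte array can overflow
// the call stack), then base64-encode the whole string in one btoa call.
function toBase64(bytes: Uint8Array): string {
  let binary = "";
  const CHUNK = 0x8000;
  for (let i = 0; i < bytes.length; i += CHUNK) {
    binary += String.fromCharCode(...bytes.subarray(i, i + CHUNK));
  }
  return btoa(binary);
}

// OpenAI-compatible vision content part with a plain `image_url` field.
function toImagePart(bytes: Uint8Array, mime: string) {
  return {
    type: "image_url" as const,
    image_url: { url: `data:${mime};base64,${toBase64(bytes)}` },
  };
}
```

For example, two images, one video, and one PDF with a 1,500-token prompt cost 750 + 2 × 750 + 1000 + 750 = 4000 points under these assumptions. A plain object like the one `toImagePart` returns serializes to exactly `{"type":"image_url","image_url":{"url":…}}`; an object literal that exposes `image_url` through a getter over a separate backing field would also serialize the backing field, since both are enumerable own properties.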